Latest revision as of 11:22, 10 June 2013

ECE662: Statistical Pattern Recognition and Decision Making Processes

Spring 2008, Prof. Boutin

Collectively created by the students in the class

Lecture 19 Lecture notes


We have seen that the Nearest Neighbor (NN) error rate, as the number of samples approaches infinity, is

$ P=\int(1-\sum_{i=1}^c P^2(w_i|\vec{x}))p(\vec{x})d\vec{x} $

We would like to be able to answer two questions:

1) How good is that in terms of error rate?

2) How does it compare to Bayes, the best error rate we can achieve?

Recall that the error rate is $ P(e)=\int P(e|\vec{x})p(\vec{x})d\vec{x} $. For every x, the Bayes rule yields the minimum possible $ P(e|\vec{x})=:P^*(e|\vec{x}) $

Thus, we get the minimum $ P(e)=:P^*=\int P^*(e|\vec{x})p(\vec{x})d\vec{x} $
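As a small illustration of the two integrands above, the following sketch evaluates the asymptotic NN error and the Bayes error at a single point x. The function names and the posterior values are hypothetical, chosen only for the example.

```python
def nn_conditional_error(posteriors):
    """Asymptotic NN error at a point x: 1 - sum_i P(w_i|x)^2."""
    return 1.0 - sum(p * p for p in posteriors)


def bayes_conditional_error(posteriors):
    """Bayes error at a point x: 1 - max_i P(w_i|x)."""
    return 1.0 - max(posteriors)


# Hypothetical posteriors P(w_i|x) for c = 3 classes at one point x.
posteriors = [0.7, 0.2, 0.1]
nn_err = nn_conditional_error(posteriors)
bayes_err = bayes_conditional_error(posteriors)
print(nn_err, bayes_err)
```

Integrating each quantity against $ p(\vec{x}) $ gives $ P $ and $ P^* $ respectively.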

Claim 1: If $ P^* $ is low, then $ P\approx 2P^* $ (This assumes an infinite number of samples.)

Justification: $ P^*(e|\vec{x})=1-P(w_{max}|\vec{x}) $, where $ w_{max} $ is such that $ P(w_{max}|\vec{x})\geq P(w_j|\vec{x}),\forall j $

So, $ P^* $ low => $ P^*(e|\vec{x}) $ is low for almost every x.

=> $ P(w_{max}|\vec{x}) $ is close to 1 for almost every x.

We have $ P=\int(1-\sum_{i=1}^cP^2(w_i|\vec{x}))p(\vec{x})d\vec{x} $ and, for almost every x, $ 1-\sum_{i=1}^cP^2(w_i|\vec{x})\approx 1-P^2(w_{max}|\vec{x})\approx 2(1-P(w_{max}|\vec{x})) $, by a first-order Taylor expansion of $ 1-t^2 $ around $ t=1 $

$ =2P^*(e|\vec{x}) $

=> $ P\approx\int 2P^*(e|\vec{x})p(\vec{x})d\vec{x}=2P^* $
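The approximation in Claim 1 can be checked numerically at a single point x. The sketch below is only an illustration under an assumption not made in the notes: the Bayes error `eps` at x is split evenly over the other $ c-1 $ classes. The helper `ratio_for` is a hypothetical name.

```python
def nn_conditional_error(posteriors):
    """Asymptotic NN error at a point x: 1 - sum_i P(w_i|x)^2."""
    return 1.0 - sum(p * p for p in posteriors)


def bayes_conditional_error(posteriors):
    """Bayes error at a point x: 1 - max_i P(w_i|x)."""
    return 1.0 - max(posteriors)


def ratio_for(eps, c=3):
    """NN/Bayes conditional error ratio when P(w_max|x) = 1 - eps and the
    remaining mass eps is split evenly over the other c-1 classes
    (an assumption made only for this example)."""
    posteriors = [1.0 - eps] + [eps / (c - 1)] * (c - 1)
    return nn_conditional_error(posteriors) / bayes_conditional_error(posteriors)


# The ratio should approach 2 as the Bayes error at x shrinks.
ratios = [ratio_for(eps) for eps in (0.1, 0.01, 0.001)]
print(ratios)
```

Under this assumption the ratio equals $ 2-\frac{c}{c-1}\epsilon $, so it tends to 2 as $ \epsilon\to 0 $.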

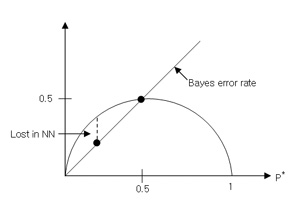

Claim 2: $ P^*\leq P\leq (2-\frac{c}{c-1}P^*)P^* $

$ P^*\leq P $ is immediate, since no decision rule can beat Bayes. This lower bound is in fact tight.

For the RHS inequality, recall $ P=\int(1-\sum_{i=1}^cP^2(w_i|\vec{x}))p(\vec{x})d\vec{x} $

To bound $ P $ from above, find a lower bound for $ \sum_{i=1}^cP^2(w_i|\vec{x}) $

Write $ \sum_{i=1}^cP^2(w_i|\vec{x})=P^2(w_m|\vec{x})+\sum_{i\neq m}P^2(w_i|\vec{x}) $, where $ w_m=w_{max} $ is the Bayes decision at x

Minimize $ \sum_{i\neq m}P^2(w_i|\vec{x}) $

under the constraint $ \sum_{i\neq m}P(w_i|\vec{x})=1-P(w_m|\vec{x})=P^*(e|\vec{x}) $

The minimum is attained when the remaining probability is spread evenly over the other $ c-1 $ classes:

$ P(w_i|\vec{x})=\frac{P^*(e|\vec{x})}{c-1},\forall i\neq m $

So we have

$ \sum_{i=1}^cP^2(w_i|\vec{x})\geq (1-P^*(e|\vec{x}))^2 +(c-1)(\frac {P^*(e|\vec{x})}{c-1})^2 $

$ =(1-P^*(e|\vec{x}))^2 +\frac{(P^*(e|\vec{x}))^2}{c-1} $

$ =1-2P^*(e|\vec{x})+(P^*(e|\vec{x}))^2 +\frac{(P^*(e|\vec{x}))^2}{c-1} $

$ =1-2P^*(e|\vec{x})+\frac{c}{c-1}(P^*(e|\vec{x}))^2 $

So the integrand satisfies

$ 1-\sum_{i=1}^cP^2(w_i|\vec{x})\leq 1-(1-2P^*(e|\vec{x})+\frac{c}{c-1}(P^*(e|\vec{x}))^2) $

$ =2P^*(e|\vec{x})-\frac{c}{c-1}(P^*(e|\vec{x}))^2 $
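The pointwise bound just derived can be sanity-checked on randomly drawn posterior vectors. This is a minimal sketch, not a proof; the helper names, the seed, and the choice $ c=4 $ are assumptions made for the example.

```python
import random


def pointwise_upper_bound(bayes_err, c):
    """Right-hand side of the bound: 2 P*(e|x) - c/(c-1) P*(e|x)^2."""
    return 2.0 * bayes_err - (c / (c - 1)) * bayes_err ** 2


def random_posteriors(c, rng):
    """Draw a random probability vector of length c (illustrative only)."""
    weights = [rng.random() + 1e-12 for _ in range(c)]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(0)
c = 4
violations = 0
for _ in range(10000):
    post = random_posteriors(c, rng)
    nn_err = 1.0 - sum(p * p for p in post)
    bayes_err = 1.0 - max(post)
    # Small tolerance for floating-point rounding.
    if nn_err > pointwise_upper_bound(bayes_err, c) + 1e-12:
        violations += 1

# Equality case: the non-maximal classes share the Bayes error evenly.
e = 0.3
tight = [1.0 - e] + [e / (c - 1)] * (c - 1)
gap = pointwise_upper_bound(e, c) - (1.0 - sum(p * p for p in tight))
print(violations, gap)
```

The second part reproduces the minimizing configuration from the derivation, where the bound holds with equality.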

To get a better bound, observe that

$ Var(P^*(e|\vec{x}))\geq 0 $

=> $ \int(P^*(e|\vec{x})-P^*)^2p(\vec{x})d\vec{x}\geq 0 $

=> $ \int ((P^*(e|\vec{x}))^2-2P^*P^*(e|\vec{x})+(P^*)^2) p(\vec{x})d\vec{x}\geq 0 $

=> $ \int (P^*(e|\vec{x}))^2p(\vec{x})d\vec{x} - 2P^* \int P^*(e|\vec{x})p(\vec{x})d\vec{x} + (P^*)^2 \int p(\vec{x})d\vec{x} \geq 0 $

(Here $ \int P^*(e|\vec{x})p(\vec{x})d\vec{x} = P^* $ and $ \int p(\vec{x})d\vec{x}=1 $, so the left-hand side equals $ \int (P^*(e|\vec{x}))^2p(\vec{x})d\vec{x}-(P^*)^2 $.)

=> $ \int (P^*(e|\vec{x}))^2p(\vec{x})d\vec{x} \geq (P^*)^2 $

(Equality holds only if the variance is 0, i.e. $ P^*(e|\vec{x}) $ is constant for almost every x.)

So, $ P\leq \int (2P^*(e|\vec{x})- \frac{c}{c-1} (P^*(e|\vec{x}))^2 ) p(\vec{x})d\vec{x} $

$ = 2 \int P^*(e|\vec{x})p(\vec{x})d\vec{x} - \frac{c}{c-1} \int (P^*(e|\vec{x}))^2p(\vec{x})d\vec{x} $

$ \leq 2P^* - \frac {c}{c-1} (P^*)^2 $

$ = (2- \frac{c}{c-1} P^* ) P^* $

For $ c=2 $: $ P^* \leq P \leq P^* (2-2P^*)=2P^*(1-P^*) $
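To see the two-sided bound of Claim 2 on concrete numbers, the sketch below uses a hypothetical toy problem in which x takes only three values, so the integrals become finite sums. The values of `p_x` and `posteriors` are made up for illustration.

```python
# Hypothetical toy problem: x takes three values with probabilities p_x,
# and posteriors[k] lists P(w_i|x_k) for c = 2 classes.
p_x = [0.5, 0.3, 0.2]
posteriors = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8]]
c = 2

# Bayes error P*: average of 1 - max_i P(w_i|x) over x.
bayes = sum(px * (1.0 - max(post)) for px, post in zip(p_x, posteriors))

# Asymptotic NN error P: average of 1 - sum_i P(w_i|x)^2 over x.
nn = sum(px * (1.0 - sum(q * q for q in post))
         for px, post in zip(p_x, posteriors))

# Upper bound from Claim 2: (2 - c/(c-1) P*) P*.
upper = (2.0 - (c / (c - 1)) * bayes) * bayes

print(bayes, nn, upper)
```

Here the upper bound is not attained because $ P^*(e|\vec{x}) $ varies with x, so the variance used in the derivation is strictly positive.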
