
Latest revision as of 11:18, 10 June 2013

ECE662: Statistical Pattern Recognition and Decision Making Processes

Spring 2008, Prof. Boutin

Collectively created by the students in the class


Lecture 10 Lecture notes


The perceptron algorithm maps an input to a single binary output value.

For a proof of the perceptron convergence theorem, see the Perceptron convergence proof page.

The perceptron was first introduced in Lecture 9; this lecture discusses the gradient descent algorithm it uses.

Gradient Descent

Main article: Gradient Descent

Consider the cost function $ J_p(\vec{c}) = \sum -\vec{c} \cdot y_i $, where the sum runs over the misclassified samples $ y_i $, i.e., those with $ \vec{c} \cdot y_i \le 0 $.

We use the gradient descent procedure to minimize $ J_p(\vec{c}) $.

Compute $ \nabla J_p(\vec{c}) = ... = - \sum y_i $.
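The elided step in the gradient computation can be written out as a short derivation. Here $\mathcal{M}$ (our notation, not from the lecture) denotes the set of misclassified samples, assumed fixed in a neighborhood of $\vec{c}$ so that $J_p$ is linear there, and $y_{ij}$ is the $j$-th component of $y_i$.

```latex
\begin{aligned}
J_p(\vec{c}) &= \sum_{y_i \in \mathcal{M}} -\vec{c} \cdot y_i
             = -\vec{c} \cdot \Big( \sum_{y_i \in \mathcal{M}} y_i \Big) \\
\frac{\partial J_p}{\partial c_j} &= -\sum_{y_i \in \mathcal{M}} y_{ij}
  \quad\Longrightarrow\quad
  \nabla J_p(\vec{c}) = -\sum_{y_i \in \mathcal{M}} y_i
\end{aligned}
```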

Follow the basic gradient descent procedure:

- Make an initial guess $ \vec{c_1} $.

- Update $ \vec{c_2} = \vec{c_1} - \eta(1) \nabla J_p(\vec{c_1}) $, where $ \eta(1) $ is the step size.

- Iterate $ \vec{c_{k+1}} = \vec{c_{k}} - \eta(k) \nabla J_p(\vec{c_k}) $ until it "converges" (e.g., when $ \| \eta(k) \nabla J_p(\vec{c_k}) \| $ < threshold).
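The procedure above can be sketched generically in Python. This is a minimal illustration, not the course's code: the function name `gradient_descent`, the stopping rule on the norm of the step, the safety cap `max_iter`, and the toy quadratic cost are all assumptions chosen so the result is easy to check.

```python
import numpy as np


def gradient_descent(grad, c1, eta, threshold=1e-8, max_iter=10_000):
    """Iterate c_{k+1} = c_k - eta(k) * grad(c_k) until the step is small.

    grad      -- function returning the gradient of the cost at c
    c1        -- initial guess
    eta       -- function k -> step size eta(k), for k = 1, 2, ...
    threshold -- stop once ||eta(k) * grad(c_k)|| < threshold
    max_iter  -- hypothetical safety cap on the number of iterations
    """
    c = np.asarray(c1, dtype=float)
    for k in range(1, max_iter + 1):
        step = eta(k) * grad(c)
        c = c - step
        if np.linalg.norm(step) < threshold:
            break
    return c, k


# Toy cost J(c) = ||c - a||^2 with gradient 2 (c - a); its minimizer is a.
a = np.array([1.0, -2.0])
c_min, n_iter = gradient_descent(lambda c: 2.0 * (c - a),
                                 c1=[0.0, 0.0],
                                 eta=lambda k: 0.1)
print(c_min, n_iter)
```

Passing `eta` as a function of `k` mirrors the notation $\eta(k)$; a constant step is just `lambda k: cst`.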

Gradient Descent in the Perceptron Algorithm

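- Theorem: If the samples are linearly separable, then the "batch perceptron" iterative algorithm $ \vec{c_{k+1}} = \vec{c_k} + cst \sum y_i $, where the sum runs over the misclassified samples $ y_i $ and $ cst > 0 $ is a constant step size, terminates after a finite number of steps. The proof of this theorem (see Perceptron_Convergence_Theorem) is due to Novikoff (1962).

This is the gradient descent update with $ \eta(k) = cst $, since $ \nabla J_p(\vec{c}) = -\sum y_i $.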
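A minimal sketch of the batch perceptron update, assuming the usual "normalized" sample convention (each sample augmented with a leading 1 and negated if it belongs to the second class, so that the goal is $\vec{c} \cdot y_i > 0$ for every sample). The names `batch_perceptron`, `augment`, `step` and `max_iter`, and the toy data, are hypothetical.

```python
import numpy as np


def batch_perceptron(Y, step=1.0, max_iter=1000):
    """Batch perceptron: c_{k+1} = c_k + step * (sum of misclassified y_i).

    Y holds one "normalized" sample y_i per row (augmented with a leading 1,
    and negated for the second class), so the goal is c . y_i > 0 for all i.
    Returns the final c and the number of updates performed.
    """
    c = np.zeros(Y.shape[1])
    for k in range(max_iter):
        misclassified = Y[Y @ c <= 0]        # rows with c . y_i <= 0
        if len(misclassified) == 0:          # all samples correct: terminate
            return c, k
        c = c + step * misclassified.sum(axis=0)
    raise RuntimeError("no solution within max_iter; data may not be separable")


def augment(X):
    """Prepend a constant 1 to each sample (the bias term)."""
    return np.hstack([np.ones((len(X), 1)), X])


# Hypothetical, linearly separable 2-D toy data.
X1 = np.array([[2.0, 2.0], [3.0, 1.0], [1.5, 2.5]])       # class 1
X2 = np.array([[-2.0, -2.0], [-1.0, -3.0], [-2.5, -1.0]])  # class 2
Y = np.vstack([augment(X1), -augment(X2)])
c, n_updates = batch_perceptron(Y)
print(c, n_updates)
```

The `max_iter` cap matters only when the data are not separable; in that case the theorem gives no guarantee and the loop may never reach a vector with no misclassified samples.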

In practice, however, the data are often not linearly separable, so we use the least squares procedure instead.

We want $ \vec{c} \cdot y_i > 0 $ for all samples $ y_i $. This is a system of linear inequalities, which is generally hard to solve, so we convert it into a system of linear equalities.

We choose $ b_i > 0 $ and solve $ \vec{c} \cdot y_i = b_i $ for all $ i $.

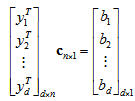

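Stacking these equations for $ i = 1, \dots, d $, with the samples as the rows of a matrix, gives a matrix equation of the following form:

$$ \begin{pmatrix} y_1^{\top} \\ \vdots \\ y_d^{\top} \end{pmatrix} \vec{c} = \begin{pmatrix} b_1 \\ \vdots \\ b_d \end{pmatrix} $$

This can also be written as $ Y \vec{c} = \vec{b} $.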

If $ d = n $ and $ \vec{y_1}, \dots, \vec{y_d} $ are "generic" (i.e., $ \det Y \neq 0 $), then we "can" solve by matrix inversion: $ \vec{c} = Y^{-1}\vec{b} $.
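For the square case, a small NumPy sketch with hypothetical samples and all margins $b_i = 1$. It uses `np.linalg.solve`, which solves $Y\vec{c} = \vec{b}$ without forming $Y^{-1}$ explicitly; this is numerically preferable to inverting the matrix, which is one reason "can" deserves its quotes.

```python
import numpy as np

# d = n = 3: three hypothetical samples y_i (the rows of Y) in 3 dimensions.
Y = np.array([[1.0, 2.0, 0.5],
              [1.0, -1.0, 2.0],
              [1.0, 0.5, -1.0]])
b = np.ones(3)                       # margins b_i > 0 (here all equal to 1)

assert abs(np.linalg.det(Y)) > 1e-12     # "generic": Y is invertible
c = np.linalg.solve(Y, b)                # solves Y c = b
print(Y @ c)                             # each c . y_i should equal b_i
```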

If $ d > n $, the system is over-constrained: in the generic case it has no solution, because there is more data than needed and the information is contradictory. In this case, we seek to minimize $ \| Y \vec{c} - \vec{b} \|_{L_2} $. The solution is given by $ \vec{c} = (Y^{\top}Y)^{-1}Y^{\top}\vec{b} $, provided $ \det(Y^{\top}Y) \ne 0 $.
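To see the over-constrained case numerically, the sketch below builds a hypothetical random $20 \times 3$ matrix $Y$ (so $d = 20 > n = 3$) and compares the normal-equation solution $(Y^{\top}Y)^{-1}Y^{\top}\vec{b}$ with NumPy's `np.linalg.lstsq`, which minimizes $\|Y\vec{c} - \vec{b}\|_2$ directly. The random data and the choice $b_i = 1$ are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n = 20, 3                              # d samples, n unknowns: d > n
Y = rng.normal(size=(d, n))               # hypothetical sample matrix
b = np.ones(d)                            # margins b_i = 1

# Normal equations: c = (Y^T Y)^{-1} Y^T b, solved without an explicit inverse.
c_normal = np.linalg.solve(Y.T @ Y, Y.T @ b)

# NumPy's least-squares solver minimizes ||Y c - b||_2 directly.
c_lstsq, residuals, rank, sv = np.linalg.lstsq(Y, b, rcond=None)

print(c_normal, c_lstsq)                  # the two solutions should agree
```

When $Y^{\top}Y$ is badly conditioned, `lstsq` (based on the SVD) is usually the more robust choice, since forming $Y^{\top}Y$ squares the condition number.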

If $ \det(Y^{\top}Y) = 0 $, the limit $ \vec{c} = \lim_{\epsilon \to 0^{+}} (Y^{\top}Y + \epsilon I)^{-1}Y^{\top}\vec{b} $ still always exists.
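A numerical check of this limit, assuming a hypothetical rank-deficient $Y$ whose third column is the sum of the first two, so that $\det(Y^{\top}Y) = 0$. For every $\epsilon > 0$ the regularized matrix is invertible, and as $\epsilon$ shrinks the solution approaches the Moore-Penrose pseudoinverse solution `np.linalg.pinv(Y) @ b`, which is the known value of this limit.

```python
import numpy as np

# Rank-deficient Y: column 3 = column 1 + column 2, so det(Y^T Y) = 0.
Y = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 1.0, 3.0]])
b = np.array([1.0, 2.0, 2.0, 3.0])


def regularized_solution(Y, b, eps):
    """c(eps) = (Y^T Y + eps I)^{-1} Y^T b, well defined for every eps > 0."""
    n = Y.shape[1]
    return np.linalg.solve(Y.T @ Y + eps * np.eye(n), Y.T @ b)


c_pinv = np.linalg.pinv(Y) @ b            # Moore-Penrose pseudoinverse solution
errors = []
for eps in (1e-1, 1e-3, 1e-6):
    c_eps = regularized_solution(Y, b, eps)
    errors.append(np.linalg.norm(c_eps - c_pinv))
print(errors)                             # shrinks toward 0 as eps -> 0
```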

Fisher's Linear Discriminant

Main article: Fisher Linear Discriminant

Fisher's linear discriminant solves a dual problem. So far, we have defined a separating hyperplane; Fisher's linear discriminant instead defines a projection that reduces the data to a single dimension.

Fisher's linear discriminant chooses the projection to maximize the between-class spread relative to the within-class spread.
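A minimal two-class sketch, assuming the standard Fisher criterion $J(\vec{w}) = (\vec{w} \cdot (\vec{m}_1 - \vec{m}_2))^2 / (\vec{w}^{\top} S_W \vec{w})$, where $\vec{m}_i$ are the class means and $S_W$ is the within-class scatter matrix; its maximizer is proportional to $S_W^{-1}(\vec{m}_1 - \vec{m}_2)$. The function names and the Gaussian toy data are hypothetical; see the main article for the derivation.

```python
import numpy as np


def fisher_direction(X1, X2):
    """Unit vector proportional to S_W^{-1} (m1 - m2).

    X1, X2 are (n_i, dim) arrays of samples from the two classes;
    S_W is the sum of the two class scatter matrices.
    """
    m1, m2 = X1.mean(axis=0), X2.mean(axis=0)
    S1 = (X1 - m1).T @ (X1 - m1)
    S2 = (X2 - m2).T @ (X2 - m2)
    w = np.linalg.solve(S1 + S2, m1 - m2)
    return w / np.linalg.norm(w)


def fisher_criterion(w, X1, X2):
    """Squared distance of projected means over the projected within-class scatter."""
    z1, z2 = X1 @ w, X2 @ w                       # 1-D projections
    spread = ((z1 - z1.mean()) ** 2).sum() + ((z2 - z2.mean()) ** 2).sum()
    return (z1.mean() - z2.mean()) ** 2 / spread


rng = np.random.default_rng(1)
cov = np.array([[3.0, 2.5], [2.5, 3.0]])          # elongated, correlated classes
X1 = rng.multivariate_normal([0.0, 0.0], cov, size=200)
X2 = rng.multivariate_normal([2.0, 0.0], cov, size=200)

w = fisher_direction(X1, X2)
w_mean = X1.mean(axis=0) - X2.mean(axis=0)        # naive: project on mean difference
print(fisher_criterion(w, X1, X2), fisher_criterion(w_mean, X1, X2))
```

With correlated classes, projecting onto the plain mean difference ignores the within-class spread; the Fisher direction accounts for it and so scores at least as high on the criterion.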

Previous: Lecture 9 Next: Lecture 11