Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.


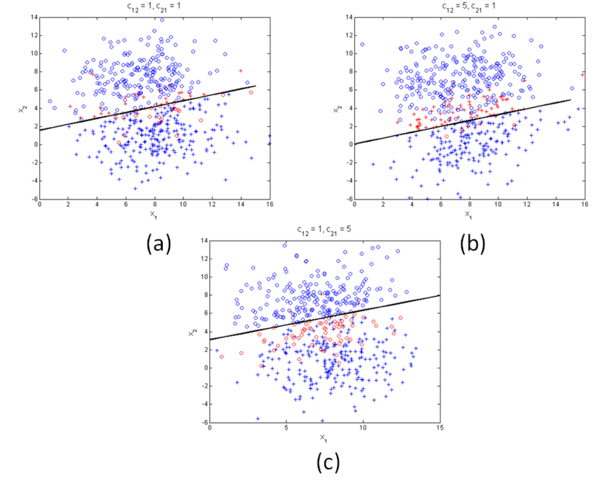


Revision as of 14:53, 12 April 2014

Bayes Error for Minimizing Risk


Introduction

Bayes rule for minimizing risk

Example 1: 1D features

Example 2: 2D features

Fig 1: Data for class 1 (crosses) and class 2 (circles). In all cases, Prob($ \omega_1 $) = Prob($ \omega_2 $) = 0.5. Misclassified points are shown in red. Values of $ \mu_1 $, $ \mu_2 $, and $ \Sigma $ are given in Eqs. (------) - (----------). As the panels show, the separating hyperplane shifts depending on the values of the costs $ c_{12} $ and $ c_{21} $.
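The shift described in the caption can be reproduced with a small sketch of the minimum-risk Bayes rule for two Gaussian classes that share a covariance $ \Sigma $. The cost convention is an assumption, since this page does not state it. Here $ c_{12} $ is the cost of deciding $ \omega_1 $ when the true class is $ \omega_2 $, $ c_{21} $ is the reverse, and correct decisions cost nothing. Under that convention the rule is: decide $ \omega_1 $ when $ c_{21} Prob(\omega_1) p(x|\omega_1) > c_{12} Prob(\omega_2) p(x|\omega_2) $. The function name `min_risk_decide` and the means and covariance below are hypothetical. They are not the values behind Fig 1.

```python
import numpy as np

def min_risk_decide(x, mu1, mu2, sigma, p1=0.5, p2=0.5, c12=1.0, c21=1.0):
    """Classify each row of x as 1 or 2 with the minimum-risk Bayes rule.

    Assumed cost convention (correct decisions cost 0):
      c12: cost of deciding omega_1 when the true class is omega_2
      c21: cost of deciding omega_2 when the true class is omega_1
    Decide omega_1 when c21 * p1 * p(x|w1) > c12 * p2 * p(x|w2).
    """
    x = np.atleast_2d(np.asarray(x, dtype=float))
    sigma_inv = np.linalg.inv(sigma)
    # With a shared covariance, ln[p1 p(x|w1)] - ln[p2 p(x|w2)] = w.x + b is linear in x.
    w = sigma_inv @ (mu1 - mu2)
    b = -0.5 * (mu1 @ sigma_inv @ mu1 - mu2 @ sigma_inv @ mu2) + np.log(p1 / p2)
    g = x @ w + b
    # The separating hyperplane is w.x + b = ln(c12 / c21); the costs only move its offset.
    return np.where(g > np.log(c12 / c21), 1, 2)

# Hypothetical parameters (not the values used in Fig 1).
mu1 = np.array([0.0, 0.0])
mu2 = np.array([2.0, 0.0])
sigma = np.eye(2)
points = np.array([[0.9, 0.0], [1.1, 0.0]])

print(min_risk_decide(points, mu1, mu2, sigma))            # [1 2]  equal costs
print(min_risk_decide(points, mu1, mu2, sigma, c21=3.0))   # [1 1]  errors on class 1 cost more
print(min_risk_decide(points, mu1, mu2, sigma, c12=3.0))   # [2 2]  errors on class 2 cost more
```

With equal costs, the boundary is the perpendicular bisector $ x_1 = 1 $. Raising $ c_{21} $ to 3 moves it toward $ \mu_2 $, to about $ x_1 \approx 1.55 $, so both points go to $ \omega_1 $. Raising $ c_{12} $ to 3 moves it toward $ \mu_1 $, to about $ x_1 \approx 0.45 $.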

Summary and Conclusions

In this lecture we have shown that the probability of error, $Prob \left[ Error \right]$, of the Bayes decision rule is upper bounded by the Chernoff bound:

$$ Prob \left[ Error \right] \leq \varepsilon_{\beta} $$

for every $ \beta \in \left[ 0, 1 \right] $.
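This page does not define $ \varepsilon_{\beta} $. The derivation below follows the standard form found in textbooks such as Duda, Hart and Stork. It assumes the minimum-error Bayes rule (zero-one loss) and class-conditional densities $ p(x|\omega_i) $; that notation is not introduced on this page. The key step is the inequality $ \min\{a, b\} \leq a^{\beta} b^{1-\beta} $ for $ a, b \geq 0 $ and $ \beta \in [0, 1] $:

$$
\begin{aligned}
Prob\left[ Error \right]
  &= \int \min\left\{ Prob(\omega_1)\, p(x|\omega_1),\; Prob(\omega_2)\, p(x|\omega_2) \right\} dx \\
  &\leq \int \left( Prob(\omega_1)\, p(x|\omega_1) \right)^{\beta} \left( Prob(\omega_2)\, p(x|\omega_2) \right)^{1-\beta} dx \;=\; \varepsilon_{\beta}
\end{aligned}
$$

The inequality holds because, if $ a \leq b $, then $ a = a^{\beta} a^{1-\beta} \leq a^{\beta} b^{1-\beta} $, and symmetrically if $ b \leq a $. Since the bound holds for every $ \beta \in [0, 1] $, the tightest one is $ \min_{\beta} \varepsilon_{\beta} $.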

When $ \beta = \frac{1}{2} $, the bound $ \varepsilon_{\frac{1}{2}} $ is known as the Bhattacharyya bound.
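To make the bounds concrete, the sketch below evaluates $ \varepsilon_{\beta} $ for two hypothetical one-dimensional Gaussian classes $ N(\mu_1, \sigma_1^2) $ and $ N(\mu_2, \sigma_2^2) $ and compares it with the exact Bayes error. It computes $ \varepsilon_{\beta} $ in two ways. The first is numerical integration. The second is a closed form obtained by completing the square in the exponent: $ \varepsilon_{\beta} = Prob(\omega_1)^{\beta} Prob(\omega_2)^{1-\beta} e^{-k(\beta)} $, where $ v = (1-\beta)\sigma_1^2 + \beta\sigma_2^2 $ and $ k(\beta) = \frac{\beta(1-\beta)(\mu_1-\mu_2)^2}{2v} + \frac{1}{2}\ln\frac{v}{\sigma_1^{2(1-\beta)}\sigma_2^{2\beta}} $. The function names, the integration grid and the class parameters are illustrative assumptions, not values from the lecture.

```python
import numpy as np

def gauss_pdf(x, mu, var):
    """1D normal density with mean mu and variance var."""
    return np.exp(-(x - mu) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)

def trapezoid(f, x):
    """Trapezoid rule on a uniform grid x."""
    dx = x[1] - x[0]
    return dx * (f.sum() - 0.5 * (f[0] + f[-1]))

def bounds_numeric(beta, mu1, var1, mu2, var2, p1=0.5, p2=0.5):
    """Return (epsilon_beta, exact Bayes error), both by numerical integration."""
    x = np.linspace(-40.0, 40.0, 400001)   # assumed wide and fine enough for these examples
    a = p1 * gauss_pdf(x, mu1, var1)       # Prob(w1) p(x|w1)
    b = p2 * gauss_pdf(x, mu2, var2)       # Prob(w2) p(x|w2)
    eps_beta = trapezoid(a ** beta * b ** (1.0 - beta), x)
    bayes_error = trapezoid(np.minimum(a, b), x)
    return eps_beta, bayes_error

def eps_beta_gaussian(beta, mu1, var1, mu2, var2, p1=0.5, p2=0.5):
    """Closed-form epsilon_beta for two 1D Gaussians (derived by completing the square)."""
    v = (1.0 - beta) * var1 + beta * var2
    k = (beta * (1.0 - beta) * (mu1 - mu2) ** 2 / (2.0 * v)
         + 0.5 * np.log(v / (var1 ** (1.0 - beta) * var2 ** beta)))
    return p1 ** beta * p2 ** (1.0 - beta) * np.exp(-k)

# Hypothetical classes: N(0, 1) and N(2, 4) with equal priors.
params = (0.0, 1.0, 2.0, 4.0)
betas = np.linspace(0.0, 1.0, 101)
eps = np.array([eps_beta_gaussian(bt, *params) for bt in betas])
_, bayes_error = bounds_numeric(0.5, *params)

print("Bayes error:         ", bayes_error)
print("Bhattacharyya bound: ", eps_beta_gaussian(0.5, *params))
print("tightest bound at beta =", betas[eps.argmin()], "->", eps.min())
```

The Bhattacharyya bound skips the search over $ \beta $. For equal variances it reduces to $ \sqrt{Prob(\omega_1) Prob(\omega_2)}\, e^{-(\mu_1-\mu_2)^2 / (8\sigma^2)} $, which is a convenient sanity check.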

References

[1]. Duda, Richard O., Hart, Peter E., and Stork, David G., "Pattern Classification (2nd Edition)," Wiley-Interscience, 2000.

[2]. [Mireille Boutin](https://engineering.purdue.edu/~mboutin/), "ECE662: Statistical Pattern Recognition and Decision Making Processes," Purdue University, Spring 2014.

Questions and comments

If you have any questions, comments, etc., please post them on this page.