Latest revision as of 08:42, 17 January 2013

Lecture 22, ECE662: Decision Theory

Lecture notes for ECE662 Spring 2008, Prof. Boutin.

Other lectures: 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28

Note: Most tree growing methods favor greatest impurity reduction near the root node.

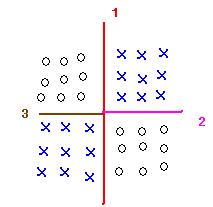

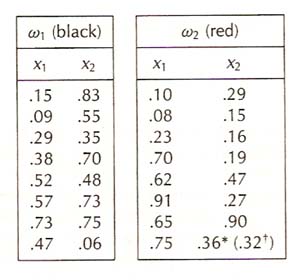

Example: assigning a category to a leaf node.

This is easy. If the samples that reach the leaf are pure (all from one class), assign that class to the leaf. Otherwise, assign the most frequent class.
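The leaf-labeling rule above can be sketched in a few lines. This is only an illustration: the function name `leaf_label` is hypothetical, and breaking ties in favor of the class seen first is an assumption, since the notes do not say how ties are handled.

```python
from collections import Counter

def leaf_label(labels):
    """Return the class to assign to a leaf, given the labels of the
    training samples that reach it (hypothetical helper)."""
    if not labels:
        raise ValueError("a leaf needs at least one training sample")
    counts = Counter(labels)
    # Pure leaf: only one class is present, so it is returned.
    # Impure leaf: the most frequent class is returned.
    # Assumption: ties go to the class encountered first.
    label, _ = counts.most_common(1)[0]
    return label

print(leaf_label(["cat", "cat", "cat"]))   # pure leaf
print(leaf_label(["cat", "dog", "dog"]))   # impure leaf, majority vote
```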

Note: The problem of building a decision tree is "ill-conditioned",

i.e. small variations in the training data can yield large variations in the decision rules obtained.

Ex. p. 405 (Duda & Hart)

Moving a single training sample slightly can change the decision rules a lot.

Clustering References

"Data clustering, a review," A.K. Jain, M.N. Murty, P.J. Flynn [1]

"Algorithms for clustering data," A.K. Jain, R.C. Dubes [2]

"Support vector clustering," Ben-Hur, Horn, Siegelmann, Vapnik [3]

"Dynamic cluster formation using level set methods," Yip, Ding, Chan [4]

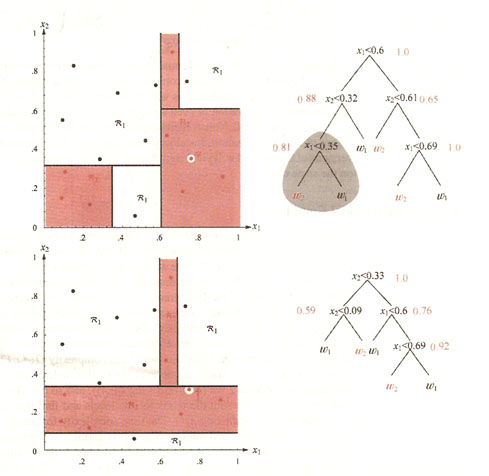

What is clustering?

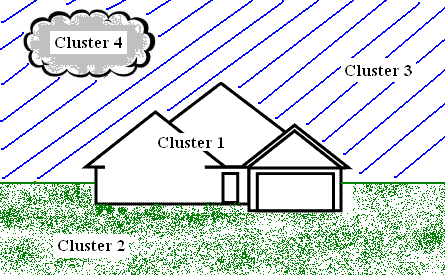

Clustering is the task of finding "natural" groupings in a data set. It is one of the most important unsupervised learning techniques. It tries to find structure in unlabeled data by grouping similar members according to some distance measure. Here is a simple figure from [5]:

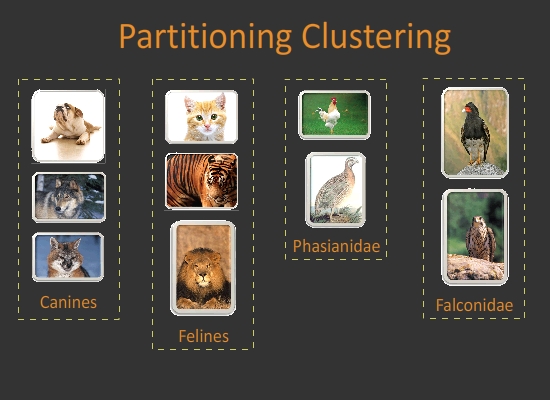

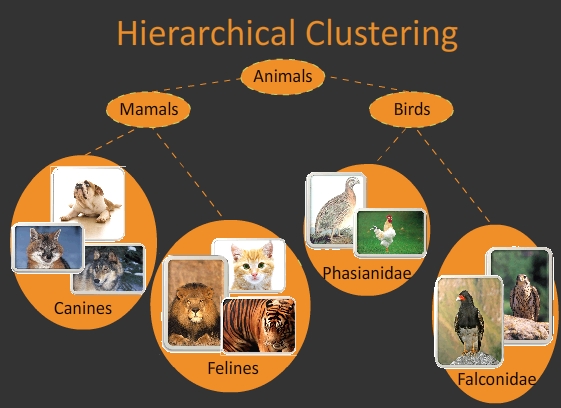

Clustering algorithms can also be classified as follows:

- Exclusive Clustering

- Overlapping Clustering

- Hierarchical Clustering

- Probabilistic Clustering

There are several clustering techniques; the most important ones are:

- K-means clustering (exclusive)

- Fuzzy C-means (overlapping)

- Hierarchical clustering

- Mixture of Gaussians (probabilistic)
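As an illustration of the first technique in the list, here is a minimal sketch of k-means in its standard form (Lloyd's algorithm). The notes do not give an implementation, so the function name, the initialization with randomly chosen data points, and the squared Euclidean distance are all assumptions made for this example.

```python
import random

def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm on a list of equal-length tuples.
    Returns (centers, assignments). Hypothetical helper for illustration."""
    rng = random.Random(seed)
    # Assumption: initialize the centers with k randomly chosen data points.
    centers = rng.sample(points, k)
    assignments = None
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center
        # (squared Euclidean distance).
        new_assignments = [
            min(range(k),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centers[j])))
            for p in points
        ]
        if new_assignments == assignments:
            break  # no point changed cluster: converged
        assignments = new_assignments
        # Update step: move each center to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, assignments

pts = [(0, 0), (1, 1), (2, 2), (10, 10), (11, 11), (12, 12)]
centers, labels = kmeans(pts, k=2)
print(centers, labels)
```

Each point belongs to exactly one cluster, which is why k-means counts as an exclusive method. The result generally depends on the initialization, so in practice the algorithm is often run from several random starts.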

Here are some useful links with information and source code for each clustering technique.

- http://home.dei.polimi.it/matteucc/Clustering/tutorial_html/index.html

- http://mvhs.shodor.org/mvhsproj/clustering/MatClust2.html

- http://www.lottolab.org/DavidCorney/ClusteringMatlab.html

Synonym: "unsupervised learning"

Clustering as a useful technique for searching in databases

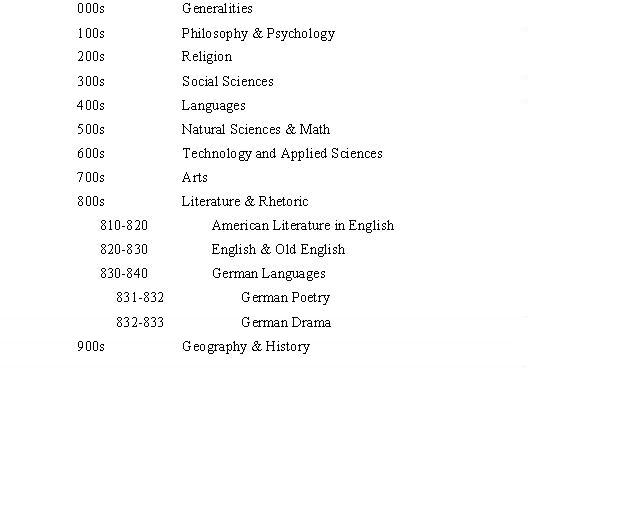

Clustering can be used to construct an index for a large dataset to be searched quickly.

- Definition: An index is a data structure that enables sub-linear time lookup.

- Example: Dewey system to index books in a library

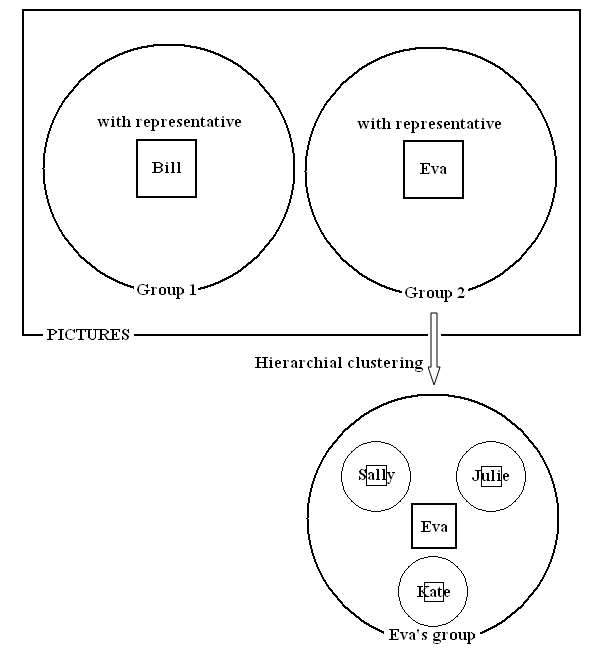

- Example of an index: face recognition

- needs face images with labels

- must cluster to obtain sub-linear search time

- Search will be faster because of the triangle inequality.
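One way the triangle inequality can speed up search is sketched below. The notes do not describe the index in detail, so this is an assumed construction: each cluster stores its center and its radius (the largest distance from the center to a member). For a query q and any member x, d(q, x) >= d(q, center) - radius, so a cluster whose lower bound is no better than the best match found so far can be skipped without examining its members. The function names are hypothetical.

```python
import math

def build_index(clusters):
    """clusters: list of lists of points (tuples).
    Returns a list of (center, radius, members) entries."""
    index = []
    for members in clusters:
        center = tuple(sum(c) / len(members) for c in zip(*members))
        radius = max(math.dist(center, p) for p in members)
        index.append((center, radius, members))
    return index

def nearest(index, q):
    """Nearest-neighbor search with cluster pruning.
    Returns (best_point, best_distance, members_examined)."""
    best, best_d, examined = None, math.inf, 0
    # Visit clusters from the closest center to the farthest.
    ranked = sorted(index, key=lambda entry: math.dist(q, entry[0]))
    for center, radius, members in ranked:
        # Triangle inequality: every member is at least this far from q.
        lower_bound = math.dist(q, center) - radius
        if lower_bound >= best_d:
            continue  # no member of this cluster can beat the current best
        for p in members:
            examined += 1
            d = math.dist(q, p)
            if d < best_d:
                best, best_d = p, d
    return best, best_d, examined

clusters = [[(0, 0), (0, 1), (1, 0)], [(10, 10), (10, 11), (11, 10)]]
index = build_index(clusters)
print(nearest(index, (0.2, 0.2)))
```

In this toy run the second cluster is pruned, so only three of the six stored points are compared against the query. How much is saved in general depends on how well the clusters separate the data.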

- Example: Image segmentation is a clustering problem

- dataset = pixels in image

- each cluster is an object in image

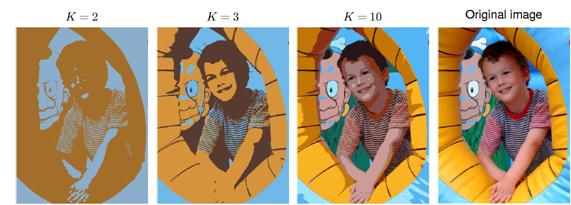

Here is another example for image segmentation and compression from [6]

As can be seen, clustering introduces a trade-off between compression and image quality. The clustering is based on the similarity of colors: the k cluster means found by k-means serve as the representative colors. Larger values of k increase the image quality but decrease the compression rate.
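The trade-off can be made concrete with a rough size estimate. The storage model here is an assumption, not something stated in the notes: the original image uses 24 bits per pixel, and the compressed image stores a palette of k colors plus one palette index of ceil(log2 k) bits per pixel. Both function names are hypothetical.

```python
def quantized_size_bits(n_pixels, k, bits_per_color=24):
    """Size of a k-color image: one palette index per pixel plus the palette."""
    index_bits = max(1, (k - 1).bit_length())  # ceil(log2 k), at least 1 bit
    return n_pixels * index_bits + k * bits_per_color

def compression_ratio(n_pixels, k, bits_per_color=24):
    """Original size divided by quantized size (larger means more compression)."""
    original = n_pixels * bits_per_color
    return original / quantized_size_bits(n_pixels, k, bits_per_color)

# A 100 x 100 image under the assumed storage model.
for k in (4, 16, 256):
    print(k, round(compression_ratio(100 * 100, k), 2))
```

Under this model the ratio falls as k grows, which matches the statement above: more representative colors give better quality but less compression.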

The input to a clustering algorithm is either

- distances between each pair of objects in the dataset

- feature vectors for each object in the dataset
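The second form of input can be converted into the first once a metric is chosen. The sketch below builds the full pairwise distance matrix from feature vectors; using the Euclidean metric is an assumption for this example, and the function name is hypothetical.

```python
import math

def pairwise_distances(features):
    """Return the symmetric n x n matrix of Euclidean distances
    between feature vectors (given as equal-length tuples)."""
    n = len(features)
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # Distances are symmetric, so fill both entries at once.
            D[i][j] = D[j][i] = math.dist(features[i], features[j])
    return D

for row in pairwise_distances([(0, 0), (3, 4), (6, 8)]):
    print(row)
```

The matrix has n^2 entries, so for large datasets an algorithm that works directly on feature vectors can be much cheaper in memory than one that needs all pairwise distances.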