Revision as of 21:20, 5 May 2014

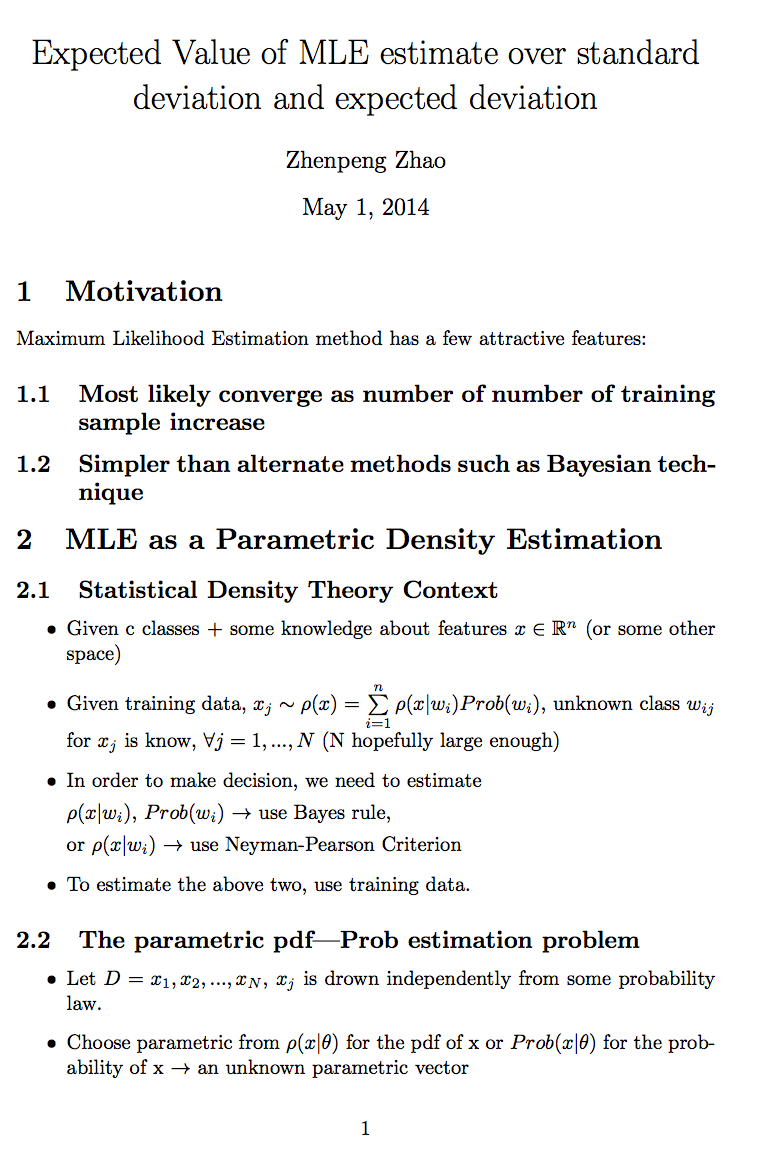

Expected Value of MLE estimate over standard deviation and expected deviation

A slecture by ECE student Zhenpeng Zhao

Partly based on the ECE662 Spring 2014 lecture material of Prof. Mireille Boutin.

1. Motivation

- The estimate most likely converges as the number of training samples increases.

- Simpler than alternative methods such as Bayesian techniques.

2. MLE as a Parametric Density Estimation

- Statistical Density Theory Context

- Given c classes + some knowledge about features $ x \in \mathbb{R}^n $ (or some other space)

- Given training data $ x_j\sim\rho(x)=\sum\limits_{i=1}^c\rho(x|w_i) Prob(w_i) $, where the class $ w_{ij} $ of each sample $ x_j $ is known, $ \forall{j}=1,...,N $ (N hopefully large enough)

- In order to make a decision, we need to estimate either both $ \rho(x|w_i) $ and $ Prob(w_i) $ $ \rightarrow $ use Bayes rule, or only $ \rho(x|w_i) $ $ \rightarrow $ use the Neyman-Pearson criterion

- To estimate the above two, use training data.

- The parametric pdf/probability estimation problem

- Let $ D=\{x_1,x_2,...,x_N\} $, where each $ x_j $ is drawn independently from some probability law.

- Choose a parametric form $ \rho(x|\theta) $ for the pdf of x, or $ Prob(x|\theta) $ for the probability of x, where $ \theta $ is an unknown parameter vector

- Use $ D $ to estimate $ \theta $

- Definition: The maximum likelihood estimate of $ \theta $ is the value $ \hat{\theta} $ that maximizes $ \rho_D(D|\theta) $ if x is a continuous R.V., or $ Prob(D|\theta) $ if x is a discrete R.V.

- Observation: By independence, $ \rho(D|\theta)=\rho(x_1,x_2,...,x_N|\theta) $ = $ \prod\limits_{j=1}^N\rho(x_j|\theta) $
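The factorization in the observation above can be sketched numerically. The snippet below is only an illustration under stated assumptions: the unit-variance Gaussian form for $ \rho(x|\theta) $, the made-up sample `data`, and the helper names `log_likelihood` and `gaussian_log_density` are not from the lecture. It sums log densities instead of multiplying densities (the two are equivalent by independence) and searches a grid of candidate $ \theta $ values.

```python
import math

def gaussian_log_density(x, theta):
    # Assumed parametric form (illustration only): Gaussian with
    # unknown mean theta and known variance 1.
    return -0.5 * math.log(2 * math.pi) - 0.5 * (x - theta) ** 2

def log_likelihood(data, theta, log_density):
    # By independence, rho(D|theta) is the product of rho(x_j|theta),
    # so its logarithm is the sum of the individual log densities.
    return sum(log_density(x, theta) for x in data)

data = [1.2, 0.7, 1.9, 1.1, 0.6]  # made-up sample, not from the lecture

# The product of densities agrees with exp(sum of log densities).
theta_check = 1.0
product = math.prod(math.exp(gaussian_log_density(x, theta_check)) for x in data)
from_logs = math.exp(log_likelihood(data, theta_check, gaussian_log_density))

# Crude grid search for the maximizer over theta in [0, 3].
grid = [i / 1000 for i in range(3001)]
theta_hat = max(grid, key=lambda t: log_likelihood(data, t, gaussian_log_density))
sample_mean = sum(data) / len(data)
print(theta_hat, sample_mean)
```

For this assumed Gaussian form the grid maximizer should land next to the sample mean, which is the well-known closed-form MLE of the mean. Working with the log is a common design choice because long products of small densities underflow quickly.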

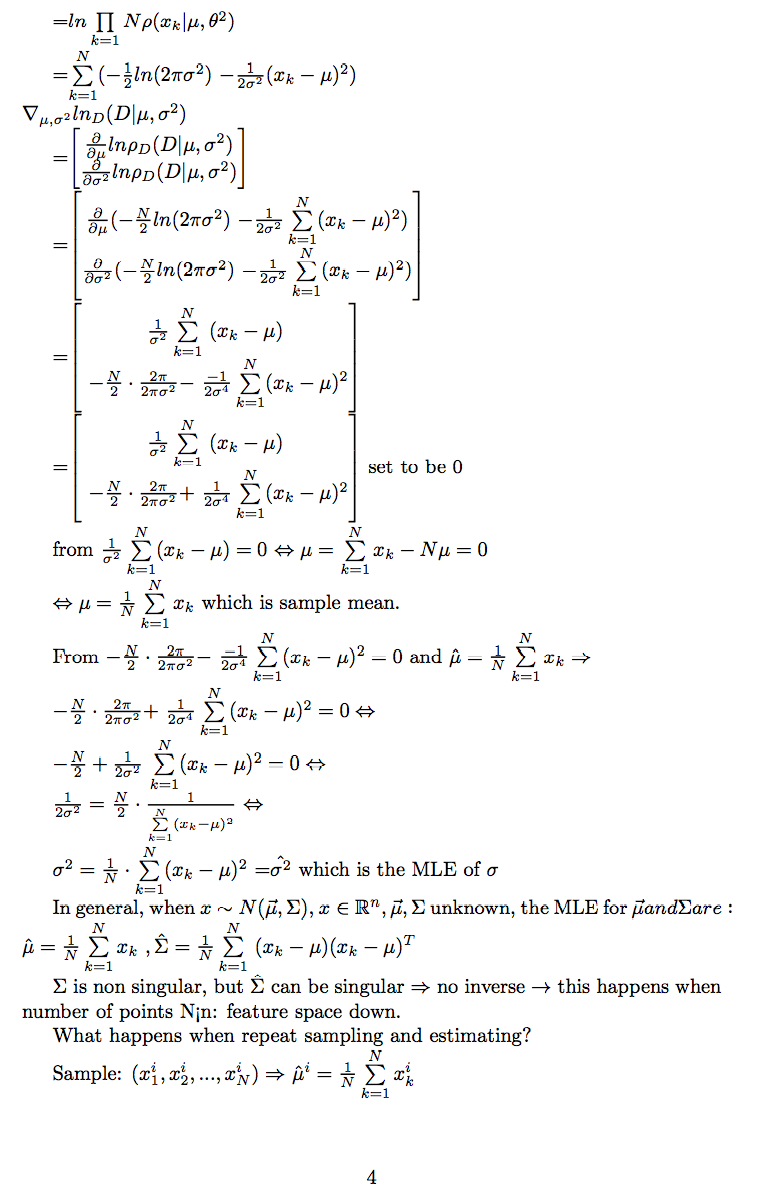

- Simple Example One:

Suppose we want to estimate the priors $ Prob(w_1), Prob(w_2) $ for $ c=2 $ classes.

Let $ Prob(w_1)=P $ $ \Rightarrow $ $ Prob(w_2)=1-P $, so $ P $ is the unknown parameter ($ \theta=P $).

Let $ w_{ij} $ be the class of $ x_j $, $ j\in\{1,2,...,N\} $.

$ Prob(D|P) $ = $ \prod\limits_{j=1}^N Prob(w_{ij}|P) $, $ x\sim \rho(x) $

=$ \prod\limits_{j:\, w_{ij}=w_1} Prob(w_{ij}|P)\prod\limits_{j:\, w_{ij}=w_2}Prob(w_{ij}|P) $

=$ P^{N_1}\cdot(1-P)^{N-N_1} $,

where the first product runs over the samples with $ w_{ij}=w_1 $, the second over the samples with $ w_{ij}=w_2 $, and $ N_1 $ = number of samples from class 1.

Since $ Prob(D|P) $ is infinitely differentiable in $ P $, a local maximum inside $ (0,1) $ is where the derivative = 0.

$ \frac{d}{dP} Prob(D|P)=\frac{d}{dP} P^{N_1}(1-P)^{N-N_1} $

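$ =N_1P^{N_1-1}(1-P)^{N-N_1}-(N-N_1)P^{N_1}(1-P)^{N-N_1-1} $

$ =P^{N_1-1}(1-P)^{N-N_1-1}[N_1(1-P)-(N-N_1)P]=0 $

$ \Rightarrow $ So either $ P=0 $, or $ P=1 $, or $ N_1(1-P)-(N-N_1)P=0 $ $ \Leftrightarrow P=\frac{N_1}{N} $

When both classes appear in $ D $, $ Prob(D|P)=0 $ at $ P=0 $ and $ P=1 $, so the maximum likelihood estimate is $ \hat{P}=\frac{N_1}{N} $.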
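As a quick sanity check of the result $ P=\frac{N_1}{N} $, the likelihood $ P^{N_1}(1-P)^{N-N_1} $ can be evaluated on a grid and its maximizer compared with the closed form. This is a minimal sketch: the label list and the helper name `likelihood` are made up for illustration and are not part of the lecture material.

```python
def likelihood(p, n1, n):
    # Prob(D|P) = P^{N_1} (1-P)^{N-N_1} for the two-class prior example.
    return p ** n1 * (1 - p) ** (n - n1)

# Made-up class labels for N = 10 training samples (1 = class w_1, 2 = class w_2).
labels = [1, 2, 1, 1, 2, 1, 2, 1, 1, 1]
n = len(labels)
n1 = labels.count(1)          # N_1: number of samples from class 1

p_closed = n1 / n             # closed-form estimate N_1 / N

# Grid search over P in [0, 1] as an independent numerical check.
grid = [i / 1000 for i in range(1001)]
p_grid = max(grid, key=lambda p: likelihood(p, n1, n))

print(p_closed, p_grid)
print(likelihood(0.0, n1, n), likelihood(1.0, n1, n))  # endpoints of [0, 1]
```

With both classes present in the labels, the likelihood vanishes at the endpoints $ P=0 $ and $ P=1 $, so the interior critical point is the one the grid search should pick out.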


Questions and comments

If you have any questions, comments, etc. please post them on https://kiwi.ecn.purdue.edu/rhea/index.php/ECE662Selecture_ZHenpengMLE_Ques.