Contents

[hide]K-Means Clustering Applications in Digital Signal Processing

by Sara Wendte

Introduction

K-means clustering is a simple unsupervised learning method. This method can be applied to implement color quantization in an image by finding clusters of pixel values. Another useful application would be automatic classification of phonemes in a speech signal by finding clusters of formant values for different speakers.

Theory

Clustering algorithms in general are used to group similar data points together. Data points that are similar are assigned a value that represents the average value of all points in that cluster. If additional data points are collected, they can be compared to the average values of other clusters and assigned to the closest one. K-means is an iterative algorithm that implements clustering by starting with randomly assigned centroid locations as the center of each cluster. In each iteration, the data points closest to each centroid are determined and the total error is calculated by adding up the total distance from each point to its corresponding centroid. Then, the centroids are adjusted until they are representative of each cluster and total error is minimized. Below is an illustration of this process taken from an online handout for a Stanford course on artificial intelligence.

Color Quantization Application

In the field of image processing, a common problem is determining how to display a color image on a device that can only display a limited number of colors without sacrificing much image quality. Color image are typically stored as three parallel matrices where each matrix represents the red, green, and blue components of the image. Each component can range from 0 to 255 which means that 256^3 colors can be represented. Since the human eye can't distinguish nearly that many unique colors, it makes sense to choose a limited amount of colors to represent a color image.

Using k-means, combinations of colors can be quantized into a certain number of levels. This works well because the human eye can't perceive the full color spectrum. In the context of k-means, these quantized color levels would be the centroids. For each pixel, the closest centroid is determined by treating each pixel as a vector of <r,g,b> and using the distance formula to find the distance between the pixel and each centroid. The algorithm assigns each centroid a color value that represents the average of all the pixels that were closest to that centroid.

The images below show the result of using k-means to quantize a color image. The image that is quantized with 256 levels is almost indistinguishable from the original. A link to the code I wrote to generate these images can be found at the bottom of this page.

| |

|

|

|

|---|---|---|---|

Phoneme Classification Application

An important aspect of speech recognition is classifying voiced phenomes. A phoneme is a specific sound (such as "long a" or "short a"). Voiced sounds (as opposed to unvoiced sounds) are produced by pushing air through the vocal tract. Different vowel sounds are created by modifying the shape of different parts of the vocal tract. Human learn how to do this naturally. This change in shape of the vocal tract amplifies and attenuates different frequencies of the speech signal. A phoneme can be classified by the first two frequencies that are amplified, known as formants.

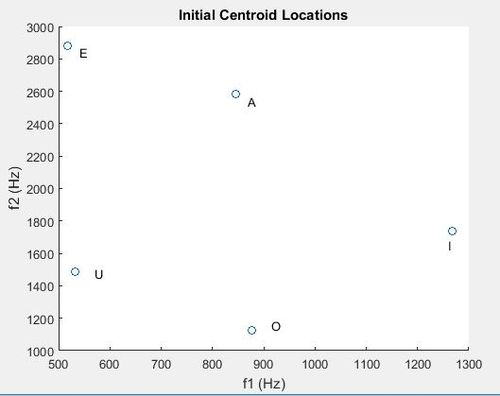

For this exercise, I focused on the first two formants of the recordings of vowel sounds provided in ECE438 Lab9a. Below is a scatter plot of the first two formants of these signals.

K-means can be applied in this situation if access to a large number of sound signals for each phoneme was available. The plot shown above would represent initial centroid locations. If a large dataset was inputted, the k-means algorithm could be implemented to find clusters of formant combinations. Formants will vary from person to person, but the average of each cluster would improve the accuracy of a speech recognition system. Of course there are many more phonemes in the English language, but this is an example of how k-means could be applied to speech processing. My code for implementing k-means in the context of signal processing is linked below.

References

"14.2-Clustering-KMeansAlgorithm- Machine Learning - Professor Andrew Ng",

https://www.youtube.com/watch?v=Ao2vnhelKhI.

Stanford CS 221,"K Means",

http://stanford.edu/~cpiech/cs221/handouts/kmeans.html.

Purdue ECE 438, "ECE438 - Laboratory 9: Speech Processing (Week 1)", October 6, 2010,

https://engineering.purdue.edu/VISE/ee438L/lab9/pdf/lab9a.pdf.

Code

Color Quantization: File:Kmeans color.pdf

Formant Classification: File:Kmeans formants.pdf