| Line 11: | Line 11: | ||

For constructing a decision tree, for a given classification problem, we have to answer these three questions | For constructing a decision tree, for a given classification problem, we have to answer these three questions | ||

| − | 1) Which question | + | 1) Which question should be asked at a given node -"Query Selection" |

2) When should we stop asking questions and declare the node to be a leaf -"When should we stop splitting" | 2) When should we stop asking questions and declare the node to be a leaf -"When should we stop splitting" | ||

| Line 27: | Line 27: | ||

Let <math> I </math> denote the impurity. Impurity can be defined in the following ways: | Let <math> I </math> denote the impurity. Impurity can be defined in the following ways: | ||

| − | + | '''Entropy Impurity''': | |

<math>I = \sum_{j}P(\omega _j)\log_2P(\omega _j)</math>, when priors are known, else approximate <math>P(\omega _j)</math> by | <math>I = \sum_{j}P(\omega _j)\log_2P(\omega _j)</math>, when priors are known, else approximate <math>P(\omega _j)</math> by | ||

<math>P(\omega _j) = \frac{\#\,of\,training\,patterns\,in\,\omega_j}{Total\,\#\,of\,training\,patterns}</math> | <math>P(\omega _j) = \frac{\#\,of\,training\,patterns\,in\,\omega_j}{Total\,\#\,of\,training\,patterns}</math> | ||

| − | + | '''Gini Impurity''' | |

<math>I = \sum_{i\ne j}P(\omega _i)P(\omega _j) = \frac{1}{2}[1- \sum_{j}P^2(\omega _j)</math> | <math>I = \sum_{i\ne j}P(\omega _i)P(\omega _j) = \frac{1}{2}[1- \sum_{j}P^2(\omega _j)</math> | ||

| Line 38: | Line 38: | ||

Ex: when C = 2, <math>I = P(\omega _1)P(\omega _2)</math> | Ex: when C = 2, <math>I = P(\omega _1)P(\omega _2)</math> | ||

| − | + | '''Misclassification Impurity''' | |

<math>I = 1-max P(\omega _j)</math> | <math>I = 1-max P(\omega _j)</math> | ||

defined as the "minimum probability that a training pattern is misclassified" | defined as the "minimum probability that a training pattern is misclassified" | ||

| + | |||

| + | |||

| + | Now let us look at each of the three questions in detail. | ||

| + | |||

| + | |||

| + | '''Query Selection''' | ||

Heuristically, want impurity to decrease from one node to its children. | Heuristically, want impurity to decrease from one node to its children. | ||

| Line 56: | Line 62: | ||

where <math>P_{L}</math> and <math>(1-P_{L})</math> are estimated with training patterns at node N. | where <math>P_{L}</math> and <math>(1-P_{L})</math> are estimated with training patterns at node N. | ||

| + | |||

| + | A query that miximizes <math>\triangle I</math> is "probably" a good one. But "finding the query that maximizes" is not a well defined question because we are not doing an exhaustive search over all the possible queries. Rather we narrow down to a set of few queries and find among them, which one maximizes <math>\triangle I</math>. | ||

| + | |||

| + | |||

| + | ''Example:'' | ||

| + | |||

| + | 1. look at separation hyperplane that miximizes <math>\triangle I(N)</math> | ||

| + | |||

| + | 2. look for a single feature threshold (colour or shape or taste) which would maximize <math>\triangle I(N)</math> | ||

| + | |||

| + | Query selection => numerical optimization problem. | ||

| + | |||

| + | |||

| + | '''When to stop splitting ?''' | ||

| + | |||

| + | Key: look for balance. | ||

| + | |||

| + | [[Image:Balance_OldKiwi.jpg]] | ||

| + | |||

| + | Need to construct a "balanced tree". Many ways to do this. | ||

| + | |||

| + | |||

| + | ''Example:'' | ||

| + | |||

| + | 1. Validation - train with 80% of training data, validate on 20%. Continue splitting until validation error is minimized. | ||

| + | |||

| + | 2. Thresholding - stop splitting when threshold <math>\beta</math> is small (but not too small) | ||

| + | |||

| + | <math>\beta=0.03</math> | ||

| + | |||

| + | |||

| + | '''Warning: "Horizon Effect"''' | ||

| + | |||

| + | Lack of looking ahead may cause us to stop splitting prematurely. | ||

| + | |||

| + | You should keep splitting for a bit, after you meet stopping criteria. | ||

| + | |||

| + | |||

| + | '''How to correct oversplitting?''' | ||

| + | |||

| + | Use "pruning" (or inverse splitting) which implies that take 2 leaves that have a common parent and merge them if "merging helps" i.e. | ||

| + | |||

| + | - if I either doesn't change or only increases a little bit. | ||

| + | |||

| + | - if validation error either stays the same or decreases. | ||

| + | |||

| + | Declare parent to be a leaf. | ||

| + | |||

| + | |||

| + | '''Pruning increases generalization.''' | ||

| + | |||

| + | |||

| + | '''Idea''': Look further than horizon but step back if it is not worth it. | ||

Revision as of 09:57, 2 April 2008

When the number of categories, c is big, decision tress are particularly good.

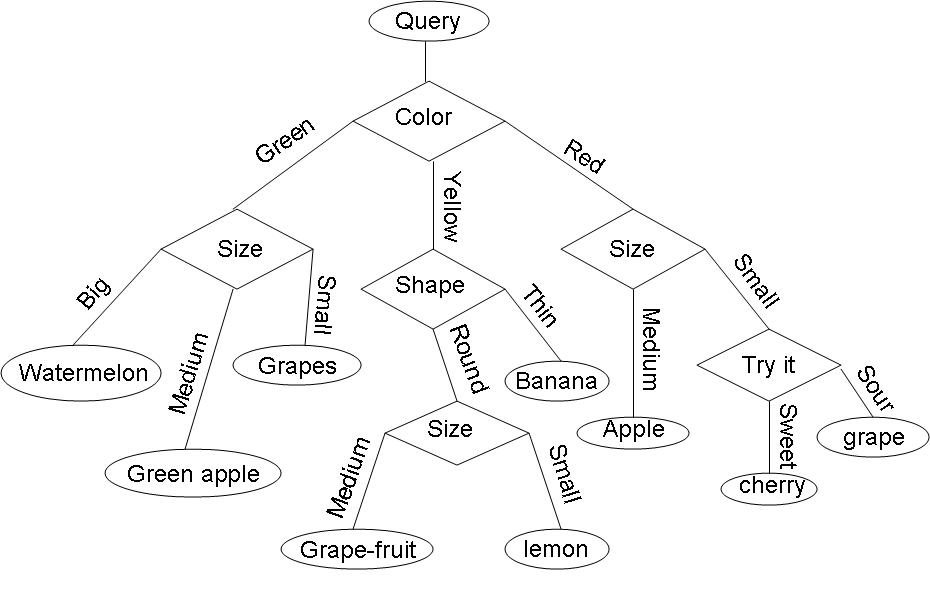

Example: Consider the query "Identify the fruit" from a set of c=7 categories {watermelon, apple, grape, lemon, grapefruit, banana, cherry} .

One possible decision tree based on simple queries is the following:

Three crucial questions to answer

For constructing a decision tree, for a given classification problem, we have to answer these three questions

1) Which question should be asked at a given node -"Query Selection"

2) When should we stop asking questions and declare the node to be a leaf -"When should we stop splitting"

3) Once a node is decided to be a leaf, what category should be assigned to this leaf -"Leaf classification"

We shall discuss questions 1 and 2 (3 being very trivial)

Need to define 'impurity' of a dataset such that $ impurity = 0 $ when all the training data belongs to one class.

Impurity is large when the training data contain equal percentages of each class

$ P(\omega _i) = \frac{1}{C} $; for all $ i $

Let $ I $ denote the impurity. Impurity can be defined in the following ways:

Entropy Impurity:

$ I = \sum_{j}P(\omega _j)\log_2P(\omega _j) $, when priors are known, else approximate $ P(\omega _j) $ by $ P(\omega _j) = \frac{\#\,of\,training\,patterns\,in\,\omega_j}{Total\,\#\,of\,training\,patterns} $

Gini Impurity

$ I = \sum_{i\ne j}P(\omega _i)P(\omega _j) = \frac{1}{2}[1- \sum_{j}P^2(\omega _j) $

Ex: when C = 2, $ I = P(\omega _1)P(\omega _2) $

Misclassification Impurity

$ I = 1-max P(\omega _j) $

defined as the "minimum probability that a training pattern is misclassified"

Now let us look at each of the three questions in detail.

Query Selection

Heuristically, want impurity to decrease from one node to its children.

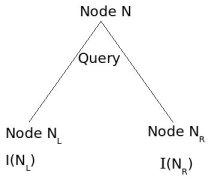

We assume that several training patterns are available at node N and they have a good mix of all different classes.

I(N) := impurity at node N.

Define impurity drop at node N as: $ \triangle I=I(N)-P_{L}I(N_{L})-(1-P_{L})I(N_{R}) $

where $ P_{L} $ and $ (1-P_{L}) $ are estimated with training patterns at node N.

A query that miximizes $ \triangle I $ is "probably" a good one. But "finding the query that maximizes" is not a well defined question because we are not doing an exhaustive search over all the possible queries. Rather we narrow down to a set of few queries and find among them, which one maximizes $ \triangle I $.

Example:

1. look at separation hyperplane that miximizes $ \triangle I(N) $

2. look for a single feature threshold (colour or shape or taste) which would maximize $ \triangle I(N) $

Query selection => numerical optimization problem.

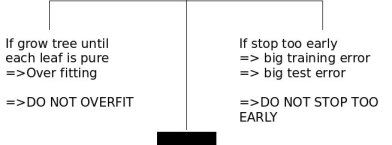

When to stop splitting ?

Key: look for balance.

Need to construct a "balanced tree". Many ways to do this.

Example:

1. Validation - train with 80% of training data, validate on 20%. Continue splitting until validation error is minimized.

2. Thresholding - stop splitting when threshold $ \beta $ is small (but not too small)

$ \beta=0.03 $

Warning: "Horizon Effect"

Lack of looking ahead may cause us to stop splitting prematurely.

You should keep splitting for a bit, after you meet stopping criteria.

How to correct oversplitting?

Use "pruning" (or inverse splitting) which implies that take 2 leaves that have a common parent and merge them if "merging helps" i.e.

- if I either doesn't change or only increases a little bit.

- if validation error either stays the same or decreases.

Declare parent to be a leaf.

Pruning increases generalization.

Idea: Look further than horizon but step back if it is not worth it.