Support Vector Machines

(Continued from Lecture 11)

- Definition

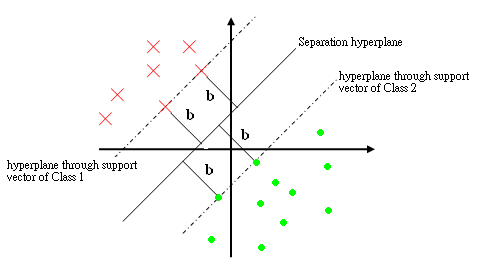

The support vectors are the training points $ y_i $ for which $ \vec{c}\cdot{y_i}=b $, i.e. the points closest to the hyperplane.
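As a rough illustration of this definition, the sketch below picks out the support vectors for a given $ \vec{c} $. It assumes that $ b $ is the smallest value of $ \vec{c}\cdot{y_i} $ over the training set and that the samples are already sign-normalized, so that a correctly classified sample has $ \vec{c}\cdot{y_i} > 0 $. The sample values, the vector `c`, and the helper names `dot` and `support_vectors` are made up for the example.

```python
def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def support_vectors(c, samples, tol=1e-9):
    """Return (b, support), where b = min_i c.y_i and support holds
    the samples that attain that minimum (up to tol)."""
    scores = [dot(c, y) for y in samples]
    b = min(scores)
    support = [y for y, s in zip(samples, scores) if abs(s - b) <= tol]
    return b, support


# Hypothetical, already sign-normalized training points.
samples = [(2.0, 1.0), (1.0, 3.0), (4.0, 4.0), (3.0, 0.0)]
c = (1.0, 1.0)  # hypothetical weight vector

b, support = support_vectors(c, samples)
print(b, support)  # the two samples with c.y_i == b are the support vectors
```

The tolerance `tol` is there only because floating-point dot products rarely compare exactly equal in practice.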

- How to Train a Support Vector Machine (SVM)

We want to find a $ \vec{c} $ such that $ \vec{c}\cdot{y_i} \geq b, \forall{i} $. This, however, is usually wishful thinking, so we instead try to satisfy it for as many training samples as possible, with $ b $ as large as possible.

Observe: if $ \vec{c} $ is a solution with margin $ b $, then $ \alpha\vec{c} $ is a solution with margin $ \alpha b $ for every real $ \alpha > 0 $. So $ b $ can be made arbitrarily large just by rescaling $ \vec{c} $.
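The scaling observation is easy to check numerically. The sketch below again assumes that the margin of $ \vec{c} $ is $ \min_i \vec{c}\cdot{y_i} $ and uses made-up sample values; `margin` is a hypothetical helper, not something defined in the lecture.

```python
def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def margin(c, samples):
    """Smallest value of c.y_i over the samples (assumed definition of b)."""
    return min(dot(c, y) for y in samples)


# Hypothetical, already sign-normalized training points.
samples = [(2.0, 1.0), (1.0, 3.0), (4.0, 4.0), (3.0, 0.0)]
c = (1.0, 1.0)

for alpha in (0.5, 2.0, 10.0):
    scaled = tuple(alpha * ci for ci in c)
    # The margin of alpha*c equals alpha times the margin of c.
    print(alpha, margin(scaled, samples), alpha * margin(c, samples))
```

Since the direction of $ \vec{c} $ never changes in this loop, the separating hyperplane is the same each time; only the number reported as the margin grows.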

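So, to pose the problem well, we demand that $ \vec{c}\cdot{y_i} \geq 1, \forall{i} $ and try to minimize $ \|\vec{c}\| $. Under this normalization the closest points lie at distance $ 1/\|\vec{c}\| $ from the hyperplane $ \vec{c}\cdot{y}=0 $, so a smaller norm means a wider margin.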
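To see why the normalized problem is well posed, the sketch below rescales a few hand-picked candidate vectors so that each satisfies $ \min_i \vec{c}\cdot{y_i} = 1 $ and then compares their norms. It is not an SVM solver: it only ranks the listed candidates, and the samples, the candidates, and the helper `canonical` are assumptions made for illustration. It also assumes the hyperplane passes through the origin, i.e. is given by $ \vec{c}\cdot{y}=0 $.

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def norm(c):
    """Euclidean norm of c."""
    return math.sqrt(dot(c, c))


def canonical(c, samples):
    """Rescale c so that the smallest c.y_i equals 1."""
    b = min(dot(c, y) for y in samples)
    if b <= 0:
        raise ValueError("c does not satisfy c.y_i > 0 for every sample")
    return tuple(ci / b for ci in c)


# Hypothetical, already sign-normalized training points.
samples = [(2.0, 1.0), (1.0, 3.0), (4.0, 4.0), (3.0, 0.0)]
# Hand-picked candidate directions, not the output of an optimizer.
candidates = [(1.0, 1.0), (1.0, 0.0), (2.0, 1.0)]

rescaled = [canonical(c, samples) for c in candidates]
best = min(rescaled, key=norm)

for r in rescaled:
    # 1 / norm(r) is the distance from the hyperplane to the closest sample.
    print(r, norm(r), 1.0 / norm(r))
print("smallest norm among the candidates:", best)
```

After rescaling, every candidate meets the constraint $ \vec{c}\cdot{y_i} \geq 1 $, so the norm is the only thing left to compare. An actual trainer would minimize the norm over all feasible $ \vec{c} $, typically with a quadratic programming routine.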