Revision as of 18:33, 2 April 2013

Space Domain Models for Optical Imaging Systems

keyword: ECE 637, digital image processing

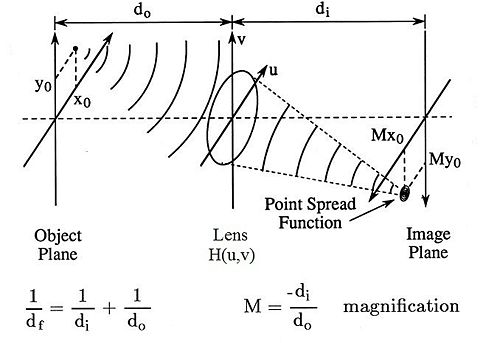

Imaging systems are well approximated as linear space-invariant systems, which is why we will use linear space-invariant systems theory to describe them.

We can characterize the lens, for a given aperture, by its impulse response. Its Point Spread Function (PSF) plays the role of the impulse response, since the PSF describes the system's response to a point input (think of a point input as $ \delta (x,y) $).

The PSF will be denoted by $ h(x,y) $ in the space domain, and its continuous-space Fourier transform (CSFT) by $ H(u,v) $.
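For reference, here is the CSFT written out, assuming the common convention in which $ u, v $ are spatial frequencies in cycles per unit distance. Setting $ u = v = 0 $ shows that $ H(0,0) $ is simply the integral of the PSF, which is the normalization used for the MTF further down.

$ H(u,v) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} h(x,y)\, e^{-j2\pi(ux+vy)}\, dx\, dy $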

Ideally, a point input would be represented in an image as a single pixel. Let this ideal image be $ f(x,y) $, and consider it the input to your imaging system.

Your actual image of the point input, however, will be reproduced as something other than a single pixel. This is the output of your imaging system; let it be $ g(x,y) $.

Let us assume, for simplicity, that your system is space-invariant. This means that an input impulse at any $ (x, y) $ location yields the same PSF, $ h(x,y) $, only shifted to that location. The image formed on the focal plane array is then given by the convolution of the ideal image you should have formed with the PSF of the system, with the magnification $ M $ of the optics entering as follows:

$ \begin{align} g(x,y) &= \int_{-\infty}^{\infty}\int_{-\infty}^{\infty}f(\xi,\eta)h(x-M\xi,y-M\eta)d\xi d\eta \\ &= \frac{1}{M^2} \int_{-\infty}^{\infty}\int_{-\infty}^{\infty}f(\frac{\xi}{M},\frac{\eta}{M})h(x-\xi,y-\eta)d\xi d\eta \end{align} $
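The step from the first line to the second is a change of variables in both integrals. With $ \xi' = M\xi $ and $ \eta' = M\eta $, the area element scales by $ 1/M^2 $ (for $ M < 0 $ the integration limits also flip, which again leaves a factor of $ 1/M^2 $); dropping the primes then gives the second line:

$ \begin{align} d\xi\, d\eta &= \frac{1}{M^2}\, d\xi'\, d\eta' \\ g(x,y) &= \frac{1}{M^2} \int_{-\infty}^{\infty}\int_{-\infty}^{\infty}f(\frac{\xi'}{M},\frac{\eta'}{M})h(x-\xi',y-\eta')d\xi' d\eta' \end{align} $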

So if you take away the magnification factor (or assume that $ |M| = 1 $), the resulting image is like the convolution of what the image should have been with the PSF of the system.
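To make the $ |M| = 1 $ case concrete, here is a minimal discrete sketch in Python. It assumes images stored as lists of lists and a hypothetical 3x3 binomial PSF; neither is specified in the original. Feeding in the ideal image of a point source, a single bright pixel, reproduces a shifted copy of the PSF, which is exactly what it means for $ h(x,y) $ to be the impulse response.

```python
# Minimal sketch: discrete 2-D convolution g = h * f for a space-invariant
# system with unit magnification (|M| = 1). Images are lists of lists of floats.

def convolve2d(f, h):
    """Full 2-D linear convolution of image f with PSF h."""
    fr, fc = len(f), len(f[0])
    hr, hc = len(h), len(h[0])
    g = [[0.0] * (fc + hc - 1) for _ in range(fr + hr - 1)]
    for i in range(fr):
        for j in range(fc):
            if f[i][j] == 0.0:
                continue  # zero input samples contribute nothing
            # Each input sample adds a scaled, shifted copy of the PSF.
            for k in range(hr):
                for l in range(hc):
                    g[i + k][j + l] += f[i][j] * h[k][l]
    return g

# Hypothetical 3x3 blur PSF (weights sum to 1).
h = [[1/16, 2/16, 1/16],
     [2/16, 4/16, 2/16],
     [1/16, 2/16, 1/16]]

# Ideal image of a point source: a single bright pixel in a 5x5 frame.
f = [[0.0] * 5 for _ in range(5)]
f[2][2] = 1.0

g = convolve2d(f, h)  # 7x7 output; g[2:5][2:5] holds a copy of h
```

Because this PSF sums to 1, the total intensity of `g` equals that of `f`; a PSF with a different sum would scale the overall brightness by that sum, the discrete counterpart of $ H(0,0) $.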

Alternatively you could define the function

$ \tilde{f}(x,y) := f(\frac{x}{M},\frac{y}{M}) $

Then the imaging system acts like a 2-D convolution where

$ g(x,y) = \frac{1}{M^{2}} h(x,y)* \tilde{f} (x,y) $
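One way to see why the factor $ \frac{1}{M^2} $ appears is that stretching $ f $ by $ M $ in both directions multiplies its integral by $ M^2 $. The factor undoes that, so the total light in $ g $ is $ H(0,0) $ times the total light in $ f $. The Python sketch below checks the $ M^2 $ scaling numerically with a Riemann sum, assuming a Gaussian stand-in for $ f $ and an illustrative $ M = 2 $.

```python
import math

# Hypothetical ideal image: an isotropic Gaussian blob (any integrable f works).
def f(x, y):
    return math.exp(-(x * x + y * y) / 2.0)

def f_tilde(x, y, M):
    """Magnified ideal image f~(x, y) = f(x/M, y/M)."""
    return f(x / M, y / M)

def integrate2d(func, half_width=15.0, step=0.1):
    """Riemann-sum approximation of a 2-D integral over a square window."""
    n = int(round(half_width / step))
    pts = [k * step for k in range(-n, n + 1)]
    return sum(func(x, y) for x in pts for y in pts) * step * step

M = 2.0
# Ratio of the integral of f~ to the integral of f; expected to be close to M**2.
ratio = integrate2d(lambda x, y: f_tilde(x, y, M)) / integrate2d(f)
```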

Real imaging systems are not perfectly space-invariant; consequently, the PSF varies as you move around the image plane. You can observe this on a starry evening by placing a camera on a tripod and photographing some of the stars in the sky. The stars are very nearly ideal point sources. The image you capture will show points, but if you zoom in on one using your computer, you will notice that it is not really a point; it is a blur spanning a cluster of pixels. If you use different apertures, you will also notice that a larger f-stop gives a bigger blur, and vice versa.

Because a star is like a delta function, you have essentially put $ \delta (x,y) $ through your system, and the star's image is the PSF. If you take its Fourier transform, you will get the frequency response of the system. Because the system is space-variant, you will notice that stars close to the edge of your photo produce PSFs that differ from those of stars near the center of the photo.
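In practice, turning a star's image into a PSF estimate takes a little bookkeeping. The helper below, `extract_psf`, is a hypothetical sketch rather than a standard routine: it crops a window around the brightest pixel, subtracts a background level estimated from the window border, and normalizes the result to unit sum. It assumes a single isolated, unsaturated star and a frame stored as a list of lists.

```python
def extract_psf(image, half=3):
    """Estimate a PSF from the image of an isolated star (hypothetical helper).

    Crops a (2*half+1)-square window around the brightest pixel, subtracts a
    background level estimated from the window border, clips negative values
    to zero, and normalizes the samples to sum to 1.
    """
    rows, cols = len(image), len(image[0])
    pi, pj = max(((i, j) for i in range(rows) for j in range(cols)),
                 key=lambda p: image[p[0]][p[1]])
    if not (half <= pi < rows - half and half <= pj < cols - half):
        raise ValueError("star too close to the frame edge for this window")
    crop = [row[pj - half: pj + half + 1] for row in image[pi - half: pi + half + 1]]
    size = 2 * half + 1
    # Background estimate: mean of the pixels on the window border.
    border = [crop[i][j] for i in range(size) for j in range(size)
              if i in (0, size - 1) or j in (0, size - 1)]
    background = sum(border) / len(border)
    psf = [[max(v - background, 0.0) for v in row] for row in crop]
    total = sum(map(sum, psf))
    return [[v / total for v in row] for row in psf]

# Synthetic frame: flat background of 10 with a small "star" centered at (7, 7).
frame = [[10.0] * 15 for _ in range(15)]
frame[7][7] = 110.0
for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
    frame[7 + di][7 + dj] = 60.0

psf = extract_psf(frame, half=3)  # 7x7 estimate; center 1/3, 4-neighbors 1/6
```

The Fourier transform of `psf` then gives the estimated frequency response at that star's position; repeating this for stars at different positions exposes the space variance.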

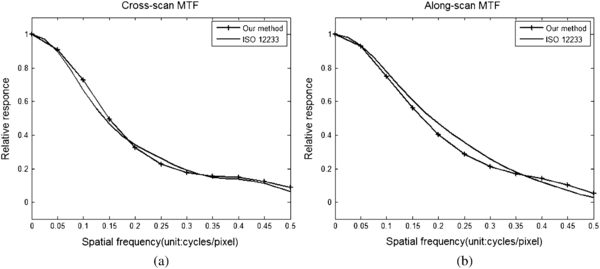
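A space-variant system can no longer be written as a single convolution; instead each input point is spread by its own PSF and the results are summed. The Python sketch below assumes a hypothetical model in which the PSF is a Gaussian whose width grows linearly with distance from the image center (the parameters `sigma0` and `alpha` are illustrative, not measured). Imaging two "stars" shows the off-center one coming out wider and dimmer at its peak, as described above.

```python
import math

def gaussian_kernel(sigma, radius):
    """Unit-sum 2-D Gaussian PSF sampled on a (2*radius+1)-square grid."""
    k = [[math.exp(-(i * i + j * j) / (2.0 * sigma * sigma))
          for j in range(-radius, radius + 1)]
         for i in range(-radius, radius + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def space_variant_blur(f, sigma0=0.8, alpha=0.15, radius=10):
    """Superpose a position-dependent PSF for every input pixel.

    Hypothetical model: sigma = sigma0 + alpha * (distance from image center).
    Light that would land outside the frame is discarded.
    """
    rows, cols = len(f), len(f[0])
    ci, cj = (rows - 1) / 2.0, (cols - 1) / 2.0
    g = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            if f[i][j] == 0.0:
                continue
            h = gaussian_kernel(sigma0 + alpha * math.hypot(i - ci, j - cj), radius)
            for di in range(-radius, radius + 1):
                for dj in range(-radius, radius + 1):
                    y, x = i + di, j + dj
                    if 0 <= y < rows and 0 <= x < cols:
                        g[y][x] += f[i][j] * h[di + radius][dj + radius]
    return g

# Two equal "stars": one at the center, one toward the upper-left corner.
n = 41
stars = [[0.0] * n for _ in range(n)]
stars[20][20] = 1.0
stars[8][8] = 1.0
g = space_variant_blur(stars)  # off-center star is spread over more pixels
```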

At this point, let's define two other functions to characterize the performance of an imaging system. The Optical Transfer Function (OTF) is $ H(u,v) $ normalized by $ H(0,0) $, and people are often interested in its magnitude, which is called the Modulation Transfer Function (MTF) of the system. So we have that

$ \begin{align} OTF &= \frac{H(u,v)}{H(0,0)} \\ \Rightarrow MTF &= |\frac{H(u,v)}{H(0,0)}| \end{align} $

Recall that

$ H(0,0) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} h(x,y)\, dx\, dy $
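For a sampled PSF the integrals become sums, so the OTF and MTF can be evaluated directly. The sketch below is a plain-Python version under that assumption, with frequencies in cycles per pixel, `dft2` and `mtf` as hypothetical helper names, and a hypothetical unnormalized 3x3 binomial PSF; dividing by $ H(0,0) $, the sum of the samples, removes the arbitrary overall scale.

```python
import cmath

def dft2(h, u, v):
    """Sampled transform H(u, v) = sum over m, n of h[m][n] * exp(-j 2 pi (u m + v n)).

    Row index m plays the role of x and column index n the role of y;
    u and v are in cycles per pixel.
    """
    return sum(h[m][n] * cmath.exp(-2j * cmath.pi * (u * m + v * n))
               for m in range(len(h)) for n in range(len(h[0])))

def mtf(h, u, v):
    """MTF(u, v) = |H(u, v) / H(0, 0)|, where H(0, 0) is the sum of the PSF samples."""
    h00 = sum(map(sum, h))
    return abs(dft2(h, u, v) / h00)

# Hypothetical unnormalized PSF; the normalization by H(0, 0) makes its scale irrelevant.
h = [[1, 2, 1],
     [2, 4, 2],
     [1, 2, 1]]
```

For this PSF the MTF along the $ u $ axis is $ \cos^2(\pi u) $, so it equals 1 at DC, falls to 0.5 at a quarter cycle per pixel, and reaches zero at 0.5 cycles per pixel.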

Figure 4 shows the one-sided MTFs of an imaging system along each frequency axis. The horizontal axes are in cycles per pixel, although MTFs are also often given in cycles per inch. MTFs may be summarized by their 3 dB cutoff frequencies. A system with a wider MTF has higher resolution, since it passes higher frequencies; this corresponds to a narrow PSF in the space domain (less blur). Conversely, a system with a narrow MTF has lower resolution, corresponding to a wide PSF in the space domain (a blurry image).
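The link between PSF width and MTF width, and the 3 dB cutoff used to summarize it, can be checked numerically. The sketch below assumes a sampled 1-D Gaussian PSF and takes "3 dB" to mean the MTF falling to $ 1/\sqrt{2} $ (about 0.707, i.e. -3 dB in amplitude); some authors quote the frequency where the MTF reaches 0.5 instead. For a Gaussian of standard deviation $ \sigma $ pixels the continuous result is $ \sqrt{\ln 2}/(2\pi\sigma) $, so doubling the PSF width should halve the cutoff.

```python
import math

def gaussian_psf_1d(sigma):
    """Sampled 1-D Gaussian PSF (hypothetical example), truncated at 5 sigma."""
    radius = int(math.ceil(5 * sigma))
    return [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]

def mtf_1d(h, u):
    """|H(u) / H(0)| for samples h, with u in cycles per pixel.

    Indexing from 0 instead of centering the PSF only adds a linear phase,
    which the magnitude ignores.
    """
    re = sum(v * math.cos(2 * math.pi * u * k) for k, v in enumerate(h))
    im = sum(v * math.sin(2 * math.pi * u * k) for k, v in enumerate(h))
    return math.hypot(re, im) / sum(h)

def cutoff_3db(h, step=1e-4):
    """First frequency up to Nyquist (0.5 cycles/pixel) where the MTF drops below 1/sqrt(2)."""
    for i in range(int(0.5 / step) + 1):
        u = i * step
        if mtf_1d(h, u) < 1 / math.sqrt(2):
            return u
    return None  # MTF stays above the 3 dB level all the way to Nyquist

fc_narrow = cutoff_3db(gaussian_psf_1d(1.0))  # narrow PSF: roughly 0.1325 cycles/pixel
fc_wide = cutoff_3db(gaussian_psf_1d(2.0))    # wide PSF: roughly half of that
```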

References

- Bouman, Charles. Digital Image Processing I. Purdue University. Spring 2013. Lecture.

- Allebach, Jan. Digital Signal Processing. Purdue University. Legacy Lecture Notes on ECE 438.

- Guo, Lingling; Wu, Zepeng; Zhang, Liguo; Ren, Jianyue. New Approach to Measure the On-Orbit Point Spread Function for Spaceborne Imagers. Mar 04, 2013. Article.
