(New page: Category:ECE600 Category:Lecture notes <center><font size= 4> '''Random Variables and Signals''' </font size> <font size= 3> Topic 13: Functions of Two Random Variables</font siz...) |

m (Protected "ECE600 F13 Functions of Two Random Variables mhossain" [edit=sysop:move=sysop]) |

||

| (15 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

[[Category:ECE600]] | [[Category:ECE600]] | ||

[[Category:Lecture notes]] | [[Category:Lecture notes]] | ||

| + | [[ECE600_F13_notes_mhossain|Back to all ECE 600 notes]] | ||

| + | [[Category:ECE600]] | ||

| + | [[Category:probability]] | ||

| + | [[Category:lecture notes]] | ||

| + | [[Category:slecture]] | ||

<center><font size= 4> | <center><font size= 4> | ||

| − | '''Random Variables and Signals''' | + | [[ECE600_F13_notes_mhossain|'''The Comer Lectures on Random Variables and Signals''']] |

</font size> | </font size> | ||

| + | |||

| + | [https://www.projectrhea.org/learning/slectures.php Slectures] by [[user:Mhossain | Maliha Hossain]] | ||

| + | |||

<font size= 3> Topic 13: Functions of Two Random Variables</font size> | <font size= 3> Topic 13: Functions of Two Random Variables</font size> | ||

</center> | </center> | ||

| − | |||

| + | ---- | ||

---- | ---- | ||

| Line 16: | Line 24: | ||

Given random variables X and Y and a function g:'''R'''<math>^2</math>→'''R''', let Z = g(X,Y). What is f<math>_Z</math>(z)? | Given random variables X and Y and a function g:'''R'''<math>^2</math>→'''R''', let Z = g(X,Y). What is f<math>_Z</math>(z)? | ||

| − | We assume that Z is a valid random variable, so that ∀z ∈ '''R''', there is a D<math>_z</math> ∈ | + | We assume that Z is a valid random variable, so that ∀z ∈ '''R''', there is a D<math>_z</math> ∈ B('''R'''<math>^2</math>) such that <br/> |

<center><math>\{Z\leq z\} = \{(X,Y)\in D_z\}</math></center> | <center><math>\{Z\leq z\} = \{(X,Y)\in D_z\}</math></center> | ||

i.e.<br/> | i.e.<br/> | ||

| Line 26: | Line 34: | ||

'''Example''' <math>\qquad</math> g(x,y) = x + y. So Z = X + Y. Here, <br/> | '''Example''' <math>\qquad</math> g(x,y) = x + y. So Z = X + Y. Here, <br/> | ||

| − | <center><math>D_z = \{(x,y)\in\ | + | <center><math>D_z = \{(x,y)\in\mathbb R^2:x+y\leq z\}</math><br/> |

and<br/> | and<br/> | ||

<math>F_Z(z) = \int_{-\infty}^{\infty}\int_{-\infty}^{z-x}f_{XY}(x,y)dydx</math></center> | <math>F_Z(z) = \int_{-\infty}^{\infty}\int_{-\infty}^{z-x}f_{XY}(x,y)dydx</math></center> | ||

| − | <center>[[Image:fig1_functions_of_two_rvs.png| | + | <center>[[Image:fig1_functions_of_two_rvs.png|500px|thumb|left|Fig 1: The shaded region indicates D<math>_z</math>.]]</center> |

| Line 42: | Line 50: | ||

Then, <br/> | Then, <br/> | ||

<center><math>\begin{align} | <center><math>\begin{align} | ||

| − | f_Z(z) &= \frac{d}{dz}[\int_{-\infty}^{\infty}f_X(x)F_Y(z-x)dx] \\ | + | f_Z(z) &= \frac{d}{dz}\left[\int_{-\infty}^{\infty}f_X(x)F_Y(z-x)dx\right] \\ |

&=\int_{-\infty}^{\infty}f_X(x)\frac{dF_Y(z-x)}{dz}dx \\ | &=\int_{-\infty}^{\infty}f_X(x)\frac{dF_Y(z-x)}{dz}dx \\ | ||

&=\int_{-\infty}^{\infty}f_X(x)f_Y(z-x)dx \\ | &=\int_{-\infty}^{\infty}f_X(x)f_Y(z-x)dx \\ | ||

| Line 48: | Line 56: | ||

\end{align}</math></center> | \end{align}</math></center> | ||

| − | So if X and Y are independent, and Z = X + Y, we can find f<math>_Z</math> by convolving f<math>_X,/math> and f<math>_Y</math>. | + | So if X and Y are independent, and Z = X + Y, we can find f<math>_Z</math> by convolving f<math>_X</math> and f<math>_Y</math>. |

| + | |||

| + | '''Example''' <math>\qquad</math> X and Y are independent exponential random variables with means <math>\mu_X</math> = <math>\mu_Y</math> = <math>\mu</math>. So,<br/> | ||

| + | <center><math>\begin{align} | ||

| + | f_Z(z)&=\int_{-\infty}^{\infty}\frac{1}{\mu}e^{-\frac{x}{\mu}}u(x)\frac{1}{\mu}e^{-\frac{(z-x)}{\mu}}u(z-x)dx \\ | ||

| + | &=\int_0^z\frac{1}{\mu^2}e^{-\frac{z}{\mu}}dx\;\;\mbox{for}\;z\geq 0 \\ | ||

| + | &=\frac{z}{\mu^2}e^{-\frac{z}{\mu}}u(z) | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | So the sum of two independent exponential random variables is not an exponential random variable. | ||

| + | |||

| + | |||

| + | ---- | ||

| + | |||

| + | ==Two Functions of Two Random Variables== | ||

| + | |||

| + | Given two random variables X and Y, consider random variables Z and W defined as follows: <br/> | ||

| + | <center><math>\begin{align} | ||

| + | Z &= g(X,Y) \\ | ||

| + | W &= h(X,Y) | ||

| + | \end{align}</math></center> | ||

| + | where g:'''R'''<math>^2</math>→'''R''' and h:'''R'''<math>^2</math>→'''R'''. | ||

| + | |||

| + | For example, if we have a linear transformation, then <br/> | ||

| + | <center><math>\begin{bmatrix}Z\\W\end{bmatrix} =\mathbf A \begin{bmatrix}X\\Y\end{bmatrix}</math></center> | ||

| + | where '''A''' is a 2x2 matrix. In this case, <br/> | ||

| + | <center><math>\begin{align} | ||

| + | g(x,y) &= a_{11}x+a_{12}y \\ | ||

| + | h(x,y) &= a_{21}x+a_{22}y | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | where <br/> | ||

| + | <center><math>\mathbf A=\begin{bmatrix} | ||

| + | a_{11} & a_{12} \\ | ||

| + | a_{21} & a_{22} | ||

| + | \end{bmatrix}</math></center> | ||

| + | |||

| + | How do we find f<math>_{ZW}</math>? | ||

| + | |||

| + | If we could find D<math>_{z,w}</math> = {(x,y) ∈ '''R'''<math>^2</math>: g(x,y) ≤ z and h(x,y) ≤ w} for every (z,w) ∈ '''R'''<math>^2</math>, then we could compute <br/> | ||

| + | <center><math>\begin{align} | ||

| + | F_{ZW}(z,w) &= P(Z\leq z, W\leq w) \\ | ||

| + | &=P((X,Y)\in D_{z,w}) \\ | ||

| + | &=\int\int_{D_{z,w}}f_{XY}(x,y)dxdy | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | It is often quite difficult to find D<math>_{z,w}</math>, so we use a formula for f<math>_{ZW}</math> instead. | ||

| + | |||

| + | |||

| + | ==Formula for Joint pdf f<math>_{ZW}</math>== | ||

| + | |||

| + | Assume that the functions g and h satisfy | ||

| + | * z = g(x,y) and w = h(x,y) can be solved simultaneously for unique x and y. We will write x = g<math>^{-1}</math>(z,w) and y = h<math>^{-1}</math>(z,w) where g<math>^{-1}</math>:'''R'''<math>^2</math>→'''R''' and h<math>^{-1}</math>:'''R'''<math>^2</math>→'''R'''. | ||

| + | :For the linear transformation example, this means we assume '''A'''<math>^{-1}</math> exists (i.e. it is invertible). In this case <br/> | ||

| + | <center><math>\begin{align} | ||

| + | g^{-1}(z,w) &= b_{11}z+b_{12}w \\ | ||

| + | h^{-1}(z,w) &= b_{21}z+b_{22}w | ||

| + | \end{align}</math></center> | ||

| + | :where <br/> | ||

| + | <center><math>\mathbf A^{-1}=\begin{bmatrix} | ||

| + | b_{11} & b_{12} \\ | ||

| + | b_{21} & b_{22} | ||

| + | \end{bmatrix}</math></center> | ||

| + | *The partial derivatives <br/> | ||

| + | <center><math>\frac{\partial x}{\partial z},\;\frac{\partial x}{\partial w},\;\frac{\partial y}{\partial z},\;\frac{\partial y}{\partial w},\;\frac{\partial z}{\partial x},\;\frac{\partial z}{\partial y},\;\frac{\partial w}{\partial x},\;\frac{\partial w}{\partial y}</math></center> | ||

| + | :exist. | ||

| + | |||

| + | Then it can be shown that <br/> | ||

| + | <center><math>f_{ZW}(z,w) = \frac{f_{XY}(g^{-1}(z,w),h^{-1}(z,w))}{\left|\frac{\partial\left(z,w\right)}{\partial\left(x,y\right)}\right|}</math></center> | ||

| + | |||

| + | where<br/> | ||

| + | <center><math> | ||

| + | \begin{align} | ||

| + | \left|\frac{\partial(z,w)}{\partial(x,y)}\right| &\equiv | ||

| + | \left|\mbox{det}\begin{bmatrix} | ||

| + | \frac{\partial z}{\partial x} & \frac{\partial z}{\partial y} \\ | ||

| + | \frac{\partial w}{\partial x} & \frac{\partial w}{\partial y} \end{bmatrix}\right| \\ | ||

| + | &= \left|\frac{\partial z}{\partial x}\frac{\partial w}{\partial y}-\frac{\partial z}{\partial y}\frac{\partial w}{\partial x} \right| | ||

| + | \end{align}</math></center> | ||

| + | <center><math>z=g(x,y)\qquad w=h(x,y)</math></center> | ||

| + | |||

| + | Note that we take the absolute value of the determinant. This is called the Jacobian of the transformation. For more on the Jacobian, see [[Jacobian|here]]. | ||

| + | |||

| + | The proof for the above formula is in Papoulis. | ||

| + | |||

| + | |||

| + | '''Example''' X and Y are iid (independent and identically distributed) Gaussian random variables with <math>\mu_X=\mu_Y=\mu = 0</math>, <math>\sigma^2</math><math>_X</math>=<math>\sigma^2</math><math>_Y</math>=<math>\sigma^2</math> and correlation coefficient r = 0. Then, <br/> | ||

| + | <center><math>f_{XY}(x,y)=\frac{1}{2\pi\sigma^2}e^{-\frac{x^2+y^2}{2\sigma^2}}</math></center> | ||

| + | Let<br/> | ||

| + | <center><math>R = \sqrt{X^2+Y^2}\qquad \Theta=\tan^{-1}\left(\frac{Y}{X}\right)</math></center> | ||

| + | |||

| + | So, <br/> | ||

| + | <center><math>\begin{align} | ||

| + | g(x,y) &= \sqrt{x^2+y^2} \\ | ||

| + | h(x,y) &= \tan^{-1}\left(\frac{y}{x}\right) \\ | ||

| + | g^{-1}(r,\theta)&=r\cos\theta \\ | ||

| + | h^{-1}(r,\theta)&=r\sin\theta | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | Now use <br/> | ||

| + | <center><math>f_{R\Theta}(r,\theta)=f_{XY}(g^{-1}(r,\theta),h^{-1}(r,\theta))\left|\frac{\partial(x,y)}{\partial(r,\theta)}\right|</math></center> | ||

| + | |||

| + | where <br/> | ||

| + | <center><math>\begin{align} | ||

| + | \left|\frac{\partial(x,y)}{\partial(r,\theta)}\right| &= \left|\mbox{det}\begin{bmatrix} | ||

| + | \frac{\partial x}{\partial r} & \frac{\partial x}{\partial\theta} \\ | ||

| + | \frac{\partial y}{\partial r} & \frac{\partial y}{\partial\theta} | ||

| + | \end{bmatrix}\right| \\ | ||

| + | \\ | ||

| + | &=\left|\mbox{det}\begin{bmatrix} | ||

| + | \cos\theta & -r\sin\theta \\ | ||

| + | \sin\theta & r\cos\theta | ||

| + | \end{bmatrix}\right| \\ | ||

| + | \\ | ||

| + | &=|r\cos^2\theta+r\sin^2\theta| \\ | ||

| + | &=r | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | |||

| + | <center><math>\begin{align} | ||

| + | f_{R\Theta}(r,\theta) &=\frac{1}{2\pi\sigma^2}e^{-\frac{r^2\cos^2\theta+r^2\sin^2\theta}{2\sigma^2}}r\cdot u(r) \\ | ||

| + | \\ | ||

| + | &=\frac{r}{2\pi\sigma^2}e^{-\frac{r^2}{2\sigma^2}}u(r)\qquad -\pi\leq\theta\leq\pi | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | We can then find the pdf of R from <br/> | ||

| + | <center><math>\begin{align} | ||

| + | f_R(r) &= \int_{-\pi}^{\pi}f_{R\Theta}(r,\theta)d\theta \\ | ||

| + | &= \frac{r}{\sigma^2}e^{-\frac{r^2}{2\sigma^2}}u(r) | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | This is the '''Rayleigh''' pdf. | ||

| + | |||

| + | |||

| + | ---- | ||

| + | |||

| + | ==Auxiliary Variables== | ||

| + | |||

| + | If Z=g(X,Y), we can define another random variable W, find f<math>_{ZW}</math>, and then integrate to get f<math>_Z</math>. We call W an "auxiliary" or "dummy" variable. We should select h(x,y) so that the solution for f<math>_Z</math> is as simple as possible when we let W=h(X,Y). For the polar coordinate problem in the previous example, h(x,y) = tan<math>^{-1}</math>(y/x) works well for finding f<math>_R</math>. Often, W = X works well since <br/> | ||

| + | <center><math> | ||

| + | \frac{\partial w}{\partial x}=1 \qquad | ||

| + | \frac{\partial w}{\partial y}=0 | ||

| + | </math></center> | ||

| + | |||

| + | |||

| + | ---- | ||

| + | |||

| + | == References == | ||

| + | |||

| + | * [https://engineering.purdue.edu/~comerm/ M. Comer]. ECE 600. Class Lecture. [https://engineering.purdue.edu/~comerm/600 Random Variables and Signals]. Faculty of Electrical Engineering, Purdue University. Fall 2013. | ||

| + | |||

| + | |||

| + | ---- | ||

| + | |||

| + | ==[[Talk:ECE600_F13_Functions_of_Two_Random_Variables_mhossain|Questions and comments]]== | ||

| + | |||

| + | If you have any questions, comments, etc. please post them on [[Talk:ECE600_F13_Functions_of_Two_Random_Variables_mhossain|this page]] | ||

| + | |||

| + | |||

| + | ---- | ||

| − | + | [[ECE600_F13_notes_mhossain|Back to all ECE 600 notes]] | |

Latest revision as of 12:12, 21 May 2014

The Comer Lectures on Random Variables and Signals

Topic 13: Functions of Two Random Variables


One Function of Two Random Variables

Given random variables X and Y and a function g:R$ ^2 $→R, let Z = g(X,Y). What is f$ _Z $(z)?

We assume that Z is a valid random variable, so that ∀z ∈ R, there is a D$ _z $ ∈ B(R$ ^2 $) such that

$$ \{Z\leq z\} = \{(X,Y)\in D_z\} $$

i.e.

Then,

and we can find f$ _Z $ from this.

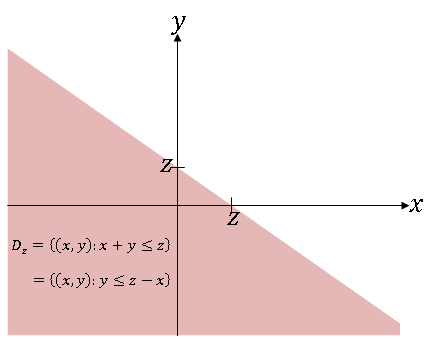

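Example $ \qquad $ g(x,y) = x + y. So Z = X + Y. Here,

$$ D_z = \{(x,y)\in\mathbb R^2:x+y\leq z\} $$

and

$$ F_Z(z) = \int_{-\infty}^{\infty}\int_{-\infty}^{z-x}f_{XY}(x,y)\,dy\,dx $$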

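If we now assume that X and Y are independent, then

$$ F_Z(z) = \int_{-\infty}^{\infty}f_X(x)F_Y(z-x)\,dx $$

Then,

$$ \begin{align}
f_Z(z) &= \frac{d}{dz}\left[\int_{-\infty}^{\infty}f_X(x)F_Y(z-x)\,dx\right] \\
&=\int_{-\infty}^{\infty}f_X(x)\frac{dF_Y(z-x)}{dz}\,dx \\
&=\int_{-\infty}^{\infty}f_X(x)f_Y(z-x)\,dx
\end{align} $$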

So if X and Y are independent, and Z = X + Y, we can find f$ _Z $ by convolving f$ _X $ and f$ _Y $.

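Example $ \qquad $ X and Y are independent exponential random variables with means $ \mu_X $ = $ \mu_Y $ = $ \mu $. So,

$$ \begin{align}
f_Z(z)&=\int_{-\infty}^{\infty}\frac{1}{\mu}e^{-\frac{x}{\mu}}u(x)\frac{1}{\mu}e^{-\frac{(z-x)}{\mu}}u(z-x)\,dx \\
&=\int_0^z\frac{1}{\mu^2}e^{-\frac{z}{\mu}}\,dx\;\;\mbox{for}\;z\geq 0 \\
&=\frac{z}{\mu^2}e^{-\frac{z}{\mu}}u(z)
\end{align} $$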

So the sum of two independent exponential random variables is not an exponential random variable.
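The convolution result for the exponential example can be checked numerically. The sketch below is only an illustration: the helper names `exp_pdf`, `convolve_at`, and `closed_form`, the value of `mu`, and the midpoint-rule step count are assumptions made here, not part of the lecture. It approximates the convolution integral of f$ _X $ and f$ _Y $ and compares it with $ \frac{z}{\mu^2}e^{-z/\mu}u(z) $.

```python
import math

def exp_pdf(x, mu):
    """Exponential pdf with mean mu; zero for x < 0 (the u(x) factor)."""
    return math.exp(-x / mu) / mu if x >= 0 else 0.0

def convolve_at(z, mu, n=2000):
    """Midpoint-rule approximation of the integral of f_X(x) f_Y(z - x) dx."""
    if z <= 0:
        return 0.0
    dx = z / n  # the integrand vanishes outside [0, z]
    total = 0.0
    for k in range(n):
        x = (k + 0.5) * dx
        total += exp_pdf(x, mu) * exp_pdf(z - x, mu)
    return total * dx

def closed_form(z, mu):
    """The pdf derived above: (z / mu^2) exp(-z / mu) u(z)."""
    return z / mu**2 * math.exp(-z / mu) if z >= 0 else 0.0

mu = 2.0  # hypothetical mean chosen for illustration
for z in (0.5, 1.0, 3.0):
    print(z, convolve_at(z, mu), closed_form(z, mu))
```

Because the integrand is constant in x on [0, z], the two printed columns should agree up to floating-point rounding.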

Two Functions of Two Random Variables

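Given two random variables X and Y, consider random variables Z and W defined as follows:

$$ \begin{align}
Z &= g(X,Y) \\
W &= h(X,Y)
\end{align} $$

where g:R$ ^2 $→R and h:R$ ^2 $→R.

For example, if we have a linear transformation, then

$$ \begin{bmatrix}Z\\W\end{bmatrix} =\mathbf A \begin{bmatrix}X\\Y\end{bmatrix} $$

where A is a 2x2 matrix. In this case,

$$ \begin{align}
g(x,y) &= a_{11}x+a_{12}y \\
h(x,y) &= a_{21}x+a_{22}y
\end{align} $$

where

$$ \mathbf A=\begin{bmatrix}
a_{11} & a_{12} \\
a_{21} & a_{22}
\end{bmatrix} $$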

How do we find f$ _{ZW} $?

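If we could find D$ _{z,w} $ = {(x,y) ∈ R$ ^2 $: g(x,y) ≤ z and h(x,y) ≤ w} for every (z,w) ∈ R$ ^2 $, then we could compute

$$ \begin{align}
F_{ZW}(z,w) &= P(Z\leq z, W\leq w) \\
&=P((X,Y)\in D_{z,w}) \\
&=\int\int_{D_{z,w}}f_{XY}(x,y)\,dx\,dy
\end{align} $$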

It is often quite difficult to find D$ _{z,w} $, so we use a formula for f$ _{ZW} $ instead.

Formula for Joint pdf f$ _{ZW} $

Assume that the functions g and h satisfy

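- z = g(x,y) and w = h(x,y) can be solved simultaneously for unique x and y. We will write x = g$ ^{-1} $(z,w) and y = h$ ^{-1} $(z,w) where g$ ^{-1} $:R$ ^2 $→R and h$ ^{-1} $:R$ ^2 $→R.

  For the linear transformation example, this means we assume A$ ^{-1} $ exists (i.e. A is invertible). In this case

  $$ \begin{align}
  g^{-1}(z,w) &= b_{11}z+b_{12}w \\
  h^{-1}(z,w) &= b_{21}z+b_{22}w
  \end{align} $$

  where

  $$ \mathbf A^{-1}=\begin{bmatrix}
  b_{11} & b_{12} \\
  b_{21} & b_{22}
  \end{bmatrix} $$

- The partial derivatives

  $$ \frac{\partial x}{\partial z},\;\frac{\partial x}{\partial w},\;\frac{\partial y}{\partial z},\;\frac{\partial y}{\partial w},\;\frac{\partial z}{\partial x},\;\frac{\partial z}{\partial y},\;\frac{\partial w}{\partial x},\;\frac{\partial w}{\partial y} $$

  exist.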

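Then it can be shown that

$$ f_{ZW}(z,w) = \frac{f_{XY}(g^{-1}(z,w),h^{-1}(z,w))}{\left|\frac{\partial\left(z,w\right)}{\partial\left(x,y\right)}\right|} $$

where

$$ \begin{align}
\left|\frac{\partial(z,w)}{\partial(x,y)}\right| &\equiv
\left|\mbox{det}\begin{bmatrix}
\frac{\partial z}{\partial x} & \frac{\partial z}{\partial y} \\
\frac{\partial w}{\partial x} & \frac{\partial w}{\partial y} \end{bmatrix}\right| \\
&= \left|\frac{\partial z}{\partial x}\frac{\partial w}{\partial y}-\frac{\partial z}{\partial y}\frac{\partial w}{\partial x} \right|
\end{align} $$

$$ z=g(x,y)\qquad w=h(x,y) $$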

Note that we take the absolute value of the determinant. This is called the Jacobian of the transformation. For more on the Jacobian, see here.

The proof for the above formula is in Papoulis.
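For the linear transformation example the Jacobian determinant is det A, so the formula reduces to f$ _{ZW} $(z,w) = f$ _{XY} $(A$ ^{-1} $(z,w)) / |det A|. The sketch below evaluates this under an assumption made here purely for illustration: X and Y are taken to be independent standard Gaussian random variables. The function names `f_xy` and `f_zw` are hypothetical.

```python
import math

def f_xy(x, y):
    """Assumed joint pdf: two independent standard Gaussian random variables."""
    return math.exp(-(x * x + y * y) / 2.0) / (2.0 * math.pi)

def f_zw(z, w, a11, a12, a21, a22):
    """Joint pdf of (Z, W) = A (X, Y) from the change-of-variables formula."""
    det = a11 * a22 - a12 * a21  # Jacobian determinant of the linear map
    if det == 0:
        raise ValueError("A must be invertible")
    # Solve z = a11 x + a12 y, w = a21 x + a22 y for (x, y) = A^{-1} (z, w).
    x = (a22 * z - a12 * w) / det
    y = (-a21 * z + a11 * w) / det
    return f_xy(x, y) / abs(det)

# Z = X + Y and W = X - Y: here Z and W are independent N(0, 2),
# so the joint pdf should equal exp(-(z^2 + w^2) / 4) / (4 pi).
z, w = 0.7, -1.2
print(f_zw(z, w, 1.0, 1.0, 1.0, -1.0))
print(math.exp(-(z * z + w * w) / 4.0) / (4.0 * math.pi))
```

The comparison value in the last line uses the standard fact that the sum and difference of two iid zero-mean Gaussian random variables are independent Gaussians with twice the variance.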

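Example X and Y are iid (independent and identically distributed) Gaussian random variables with $ \mu_X=\mu_Y=\mu = 0 $, $ \sigma^2 $$ _X $=$ \sigma^2 $$ _Y $=$ \sigma^2 $ and correlation coefficient r = 0. Then,

$$ f_{XY}(x,y)=\frac{1}{2\pi\sigma^2}e^{-\frac{x^2+y^2}{2\sigma^2}} $$

Let

$$ R = \sqrt{X^2+Y^2}\qquad \Theta=\tan^{-1}\left(\frac{Y}{X}\right) $$

So,

$$ \begin{align}
g(x,y) &= \sqrt{x^2+y^2} \\
h(x,y) &= \tan^{-1}\left(\frac{y}{x}\right) \\
g^{-1}(r,\theta)&=r\cos\theta \\
h^{-1}(r,\theta)&=r\sin\theta
\end{align} $$

Now use

$$ f_{R\Theta}(r,\theta)=f_{XY}(g^{-1}(r,\theta),h^{-1}(r,\theta))\left|\frac{\partial(x,y)}{\partial(r,\theta)}\right| $$

where

$$ \begin{align}
\left|\frac{\partial(x,y)}{\partial(r,\theta)}\right| &= \left|\mbox{det}\begin{bmatrix}
\frac{\partial x}{\partial r} & \frac{\partial x}{\partial\theta} \\
\frac{\partial y}{\partial r} & \frac{\partial y}{\partial\theta}
\end{bmatrix}\right| \\
&=\left|\mbox{det}\begin{bmatrix}
\cos\theta & -r\sin\theta \\
\sin\theta & r\cos\theta
\end{bmatrix}\right| \\
&=|r\cos^2\theta+r\sin^2\theta| \\
&=r
\end{align} $$

$$ \begin{align}
f_{R\Theta}(r,\theta) &=\frac{1}{2\pi\sigma^2}e^{-\frac{r^2\cos^2\theta+r^2\sin^2\theta}{2\sigma^2}}r\cdot u(r) \\
&=\frac{r}{2\pi\sigma^2}e^{-\frac{r^2}{2\sigma^2}}u(r)\qquad -\pi\leq\theta\leq\pi
\end{align} $$

We can then find the pdf of R from

$$ \begin{align}
f_R(r) &= \int_{-\pi}^{\pi}f_{R\Theta}(r,\theta)\,d\theta \\
&= \frac{r}{\sigma^2}e^{-\frac{r^2}{2\sigma^2}}u(r)
\end{align} $$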

This is the Rayleigh pdf.
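A quick simulation can make the Rayleigh result plausible. The sketch below is an illustration under assumed parameters (the value of `sigma`, the sample size, and the seed are arbitrary choices made here): it draws iid zero-mean Gaussian pairs, forms R = sqrt(X² + Y²), and compares the empirical cdf of R with the Rayleigh cdf 1 − exp(−r²/(2σ²)), which follows from integrating the pdf above from 0 to r.

```python
import math
import random

def simulate_radius(sigma, n, seed=0):
    """Draw n samples of R = sqrt(X^2 + Y^2) with X, Y iid N(0, sigma^2)."""
    rng = random.Random(seed)
    return [math.hypot(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
            for _ in range(n)]

def rayleigh_cdf(r, sigma):
    """Integral of (t / sigma^2) exp(-t^2 / (2 sigma^2)) from 0 to r."""
    return 1.0 - math.exp(-r * r / (2.0 * sigma * sigma)) if r >= 0 else 0.0

def empirical_cdf(samples, r):
    """Fraction of samples that are less than or equal to r."""
    return sum(1 for s in samples if s <= r) / len(samples)

sigma = 1.5  # hypothetical standard deviation chosen for illustration
samples = simulate_radius(sigma, 100_000)
for r in (0.5, 1.5, 3.0):
    print(r, empirical_cdf(samples, r), rayleigh_cdf(r, sigma))
```

With this many samples the two columns should differ only by sampling noise, on the order of a few thousandths.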

Auxiliary Variables

If Z=g(X,Y), we can define another random variable W, find f$ _{ZW} $, and then integrate to get f$ _Z $. We call W an "auxiliary" or "dummy" variable. We should select h(x,y) so that the solution for f$ _Z $ is as simple as possible when we let W=h(X,Y). For the polar coordinate problem in the previous example, h(x,y) = tan$ ^{-1} $(y/x) works well for finding f$ _R $. Often, W = X works well since

$$ \frac{\partial w}{\partial x}=1 \qquad \frac{\partial w}{\partial y}=0 $$

so the Jacobian determinant reduces to $ \left|\frac{\partial z}{\partial y}\right| $.

References

- M. Comer. ECE 600. Class Lecture. Random Variables and Signals. Faculty of Electrical Engineering, Purdue University. Fall 2013.

Questions and comments

If you have any questions, comments, etc. please post them on this page