| Line 50: | Line 50: | ||

So if X and Y are independent, and Z = X + Y, we can find f<math>_Z</math> by convolving f<math>_X</math> and f<math>_Y</math>. | So if X and Y are independent, and Z = X + Y, we can find f<math>_Z</math> by convolving f<math>_X</math> and f<math>_Y</math>. | ||

| − | '''Example''' <math>\qquad</math> | + | '''Example''' <math>\qquad</math> X and Y are independent exponential random variables with means <math>\mu_X</math> = <math>\mu_Y</math> = <math>\mu</math>. So,<br/> |

| + | <center><math>\begin{align} | ||

| + | f_Z(z)&=\int_{-\infty}^{\infty}\frac{1}{\mu}e^{-\frac{x}{\mu}}u(x)\frac{1}{\mu}e^{-\frac{(z-x)}{\mu}}u(z-x)dx \\ | ||

| + | &=\int_0^z\frac{1}{\mu^2}e^{-\frac{z}{\mu}}dx\;\;\mbox{for}\;z\geq 0 \\ | ||

| + | &=\frac{z}{\mu^2}e^{-\frac{z}{\mu}}u(z) | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | So the sum of two independent exponential random variables is not an exponential random variable. | ||

| + | |||

| + | |||

| + | ---- | ||

| + | |||

| + | ==Two Functions of Two Random Variables== | ||

| + | |||

| + | Given two random variables X and Y, consider random variables Z and W defined as follows: <br/> | ||

| + | <center><math>\begin{align} | ||

| + | Z &= g(X,Y) \\ | ||

| + | W &= h(X,Y) | ||

| + | \end{align}</math></center> | ||

| + | where g:'''R'''<math>^2</math>→'''R''' and h:'''R'''<math>^2</math>→'''R'''. | ||

| + | |||

| + | For example, if we have a linear transformation, then <br/> | ||

| + | <center><math>\begin{bmatrix}Z\\W\end{bmatrix} =A \begin{bmatrix}X\\Y\end{bmatrix}</math></center> | ||

| + | where A is a 2x2 matrix. In this case, <br/> | ||

| + | <center><math>\begin{align} | ||

| + | g(x,y) &= a_{11}x+a_{12}y \\ | ||

| + | h(x,y) &= a_{21}x+a_{22}y | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | where <br/> | ||

| + | <center><math>A=\begin{bmatrix} | ||

| + | a_{11} & a_{12} \\ | ||

| + | a_{21} & a_{22} | ||

| + | \end{bmatrix}</math></center> | ||

| + | |||

| + | How do we find f<math>_{ZW}</math>? | ||

| + | |||

| + | If we could find D<math>_{z,w}</math> = {(x,y) ∈ '''R'''<math>^2</math>: g(x,y) ≤ z and h(x,y) ≤ w} for every (z,w) ∈ '''R'''<math>^2</math>, then we could compute <br/> | ||

| + | <center><math>\begin{align} | ||

| + | F_{ZW}(z,w) &= P(Z\leq z, W\leq w) \\ | ||

| + | &=P((X,Y)\in D_{z,w}) \\ | ||

| + | &=\int\int_{D_{z,w}}f_{XY}(x,y)dxdy | ||

| + | \end{align}</math></center> | ||

| + | |||

| + | It is often quite difficult to find D<math>_{z,w}</math>, so we use a formula for f<math>_{ZW}</math> instead. | ||

| + | |||

| + | |||

| + | ==Formula for Joint pdf f<math>_{ZW}</math>== | ||

| + | |||

| + | Assume that the functions g and h satisfy | ||

| + | * z = g(x,y) and w = h(x,y) can be solved simultaneously for unique x and y. We will write x = g<math>^{-1}</math>(z,w) and y = h<math>^{-1}</math>(z,w) where g<math>^{-1}</math>:'''R'''<math>^2</math>→'''R''' and h<math>^{-1}</math>:'''R'''<math>^2</math>→'''R'''. | ||

| + | :For the linear transformation example, this means we assume A<math>^{-1}</math> exists (i.e. A is invertible). In this case <br/> | ||

| + | <center><math>\begin{align} | ||

| + | g^{-1}(z,w) &= b_{11}z+b_{12}w \\ | ||

| + | h^{-1}(z,w) &= b_{21}z+b_{22}w | ||

| + | \end{align}</math></center> | ||

| + | :where <br/> | ||

| + | <center><math>A^{-1}=\begin{bmatrix} | ||

| + | b_{11} & b_{12} \\ | ||

| + | b_{21} & b_{22} | ||

| + | \end{bmatrix}</math></center> | ||

| + | *The partial derivatives <br/> | ||

| + | <center><math>\frac{\partial x}{\partial z},\;\frac{\partial x}{\partial w},\;\frac{\partial y}{\partial z},\;\frac{\partial y}{\partial w},\;\frac{\partial z}{\partial x},\;\frac{\partial z}{\partial y},\;\frac{\partial w}{\partial x},\;\frac{\partial w}{\partial y}</math></center> | ||

| + | :exist. | ||

| + | |||

| + | Then it can be shown that <br/> | ||

| + | <center><math>f_{ZW}(z,w) = \frac{f_{XY}(g^{-1}(z,w),h^{-1}(z,w))}{\left|\frac{\partial\left(z,w\right)}{\partial\left(x,y\right)}\right|}</math></center> | ||

| + | |||

| + | where<br/> | ||

| + | <center><math> | ||

| + | \begin{align} | ||

| + | \left|\frac{\partial(z,w)}{\partial(x,y)}\right| &\equiv | ||

| + | \left|\begin{bmatrix} | ||

| + | \frac{\partial z}{\partial x} & \frac{\partial z}{\partial y} \\ | ||

| + | \frac{\partial w}{\partial x} & \frac{\partial w}{\partial y} \end{bmatrix}\right| \\ | ||

| + | &= \left|\frac{\partial z}{\partial x}\frac{\partial w}{\partial y}-\frac{\partial z}{\partial y}\frac{\partial w}{\partial x} \right| | ||

| + | \end{align}</math></center> | ||

| + | <center><math>z=g(x,y)\qquad w=h(x,y)</math></center> | ||

| + | |||

| + | Note that we take the absolute value of the determinant. The determinant itself is called the Jacobian of the transformation. For more on the Jacobian, see [[Jacobian|here]]. | ||

| + | |||

| + | The proof for the above formula is in Papoulis. | ||

| + | |||

| + | |||

| + | '''Example''' | ||

Revision as of 21:09, 4 November 2013

Random Variables and Signals

Topic 13: Functions of Two Random Variables

One Function of Two Random Variables

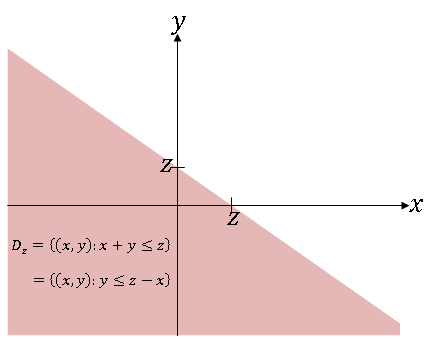

Given random variables X and Y and a function g:R$ ^2 $→R, let Z = g(X,Y). What is f$ _Z $(z)?

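We assume that Z is a valid random variable, so that ∀z ∈ R, there is a D$ _z $ ∈ B(R$ ^2 $), the Borel field of R$ ^2 $, such that

$ \{Z\leq z\} = \{(X,Y)\in D_z\} $

i.e.

$ D_z = \{(x,y)\in\mathbf{R}^2: g(x,y)\leq z\} $

Then,

$ F_Z(z) = P(Z\leq z) = P((X,Y)\in D_z) = \int\int_{D_z}f_{XY}(x,y)dxdy $

and we can find f$ _Z $ from this.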

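Example $ \qquad $ g(x,y) = x + y. So Z = X + Y. Here,

$ D_z = \{(x,y)\in\mathbf{R}^2: x+y\leq z\} $

and

$ F_Z(z) = \int_{-\infty}^{\infty}\int_{-\infty}^{z-x}f_{XY}(x,y)dydx $

If we now assume that X and Y are independent, then

$ F_Z(z) = \int_{-\infty}^{\infty}f_X(x)\int_{-\infty}^{z-x}f_Y(y)dydx = \int_{-\infty}^{\infty}f_X(x)F_Y(z-x)dx $

Then,

$ f_Z(z) = \frac{dF_Z(z)}{dz} = \int_{-\infty}^{\infty}f_X(x)f_Y(z-x)dx = (f_X*f_Y)(z) $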

So if X and Y are independent, and Z = X + Y, we can find f$ _Z $ by convolving f$ _X $ and f$ _Y $.

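Example $ \qquad $ X and Y are independent exponential random variables with means $ \mu_X $ = $ \mu_Y $ = $ \mu $. So,

$ \begin{align}
f_Z(z)&=\int_{-\infty}^{\infty}\frac{1}{\mu}e^{-\frac{x}{\mu}}u(x)\frac{1}{\mu}e^{-\frac{(z-x)}{\mu}}u(z-x)dx \\
&=\int_0^z\frac{1}{\mu^2}e^{-\frac{z}{\mu}}dx\;\;\mbox{for}\;z\geq 0 \\
&=\frac{z}{\mu^2}e^{-\frac{z}{\mu}}u(z)
\end{align} $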

So the sum of two independent exponential random variables is not an exponential random variable.
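The closed form above can be checked numerically. The following is a minimal sketch, not part of the original notes: the helper names `exp_pdf` and `convolve_at`, the midpoint rule, and the values of `mu` and `z` are all assumptions chosen for illustration. It approximates the convolution integral directly and compares it with $ \frac{z}{\mu^2}e^{-\frac{z}{\mu}} $.

```python
import math

def exp_pdf(x, mu):
    """Exponential pdf with mean mu, including the unit step u(x)."""
    return math.exp(-x / mu) / mu if x >= 0 else 0.0

def convolve_at(f_x, f_y, z, lo, hi, n=30000):
    """Midpoint-rule approximation of the integral of f_X(x) f_Y(z - x) over [lo, hi]."""
    dx = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * dx  # midpoint of the k-th cell
        total += f_x(x) * f_y(z - x)
    return total * dx

mu = 2.0  # assumed common mean of X and Y
z = 3.0   # assumed point at which f_Z is evaluated

# Integrate over a range wider than [0, z]; the unit steps zero out the rest.
numeric = convolve_at(lambda x: exp_pdf(x, mu),
                      lambda y: exp_pdf(y, mu),
                      z, lo=-z, hi=2 * z)
closed_form = z / mu**2 * math.exp(-z / mu)

print(numeric, closed_form)
```

The integration range is deliberately wider than [0, z] so that the two unit steps, rather than the limits, restrict the integrand, mirroring the first line of the derivation. The resulting density is a gamma (Erlang) pdf with shape 2, which is why it is not exponential.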

Two Functions of Two Random Variables

Given two random variables X and Y, consider random variables Z and W defined as follows:

$ \begin{align}
Z &= g(X,Y) \\
W &= h(X,Y)
\end{align} $

where g:R$ ^2 $→R and h:R$ ^2 $→R.

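For example, if we have a linear transformation, then

$ \begin{bmatrix}Z\\W\end{bmatrix} =A \begin{bmatrix}X\\Y\end{bmatrix} $

where A is a 2x2 matrix. In this case,

$ \begin{align}
g(x,y) &= a_{11}x+a_{12}y \\
h(x,y) &= a_{21}x+a_{22}y
\end{align} $

where

$ A=\begin{bmatrix}
a_{11} & a_{12} \\
a_{21} & a_{22}
\end{bmatrix} $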

How do we find f$ _{ZW} $?

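If we could find D$ _{z,w} $ = {(x,y) ∈ R$ ^2 $: g(x,y) ≤ z and h(x,y) ≤ w} for every (z,w) ∈ R$ ^2 $, then we could compute

$ \begin{align}
F_{ZW}(z,w) &= P(Z\leq z, W\leq w) \\
&=P((X,Y)\in D_{z,w}) \\
&=\int\int_{D_{z,w}}f_{XY}(x,y)dxdy
\end{align} $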

It is often quite difficult to find D$ _{z,w} $, so we use a formula for f$ _{ZW} $ instead.
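When D$ _{z,w} $ is hard to describe analytically, the probability P((X,Y) ∈ D$ _{z,w} $) can still be estimated by simulation. The sketch below is an illustration only and is not taken from the notes: the function names, the choice of independent exponential X and Y with mean 1, and the maps g(x,y) = x + y, h(x,y) = x − y are all assumptions. It draws samples of (X,Y) and counts the fraction that land in D$ _{z,w} $.

```python
import math
import random

def estimate_joint_cdf(g, h, sample_xy, z, w, n=200_000, seed=0):
    """Monte Carlo estimate of F_ZW(z, w) = P(g(X,Y) <= z and h(X,Y) <= w)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    hits = 0
    for _ in range(n):
        x, y = sample_xy(rng)
        # (x, y) lies in D_{z,w} exactly when both inequalities hold
        if g(x, y) <= z and h(x, y) <= w:
            hits += 1
    return hits / n

def sample_exponentials(rng, mu=1.0):
    """Assumed example: X and Y independent exponentials with mean mu."""
    # random.expovariate takes the rate, i.e. 1 / mean
    return rng.expovariate(1.0 / mu), rng.expovariate(1.0 / mu)

def g(x, y):
    return x + y  # Z = X + Y

def h(x, y):
    return x - y  # W = X - Y

# Letting w grow without bound recovers the marginal cdf of Z = X + Y.
estimate = estimate_joint_cdf(g, h, sample_exponentials, z=2.0, w=math.inf)
exact_marginal = 1.0 - 3.0 * math.exp(-2.0)  # integral of t e^{-t} over [0, 2]
print(estimate, exact_marginal)
```

This only estimates F$ _{ZW} $ at individual points and converges slowly, which is one reason a direct formula for f$ _{ZW} $ is preferable.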

Formula for Joint pdf f$ _{ZW} $

Assume that the functions g and h satisfy

- z = g(x,y) and w = h(x,y) can be solved simultaneously for unique x and y. We will write x = g$ ^{-1} $(z,w) and y = h$ ^{-1} $(z,w) where g$ ^{-1} $:R$ ^2 $→R and h$ ^{-1} $:R$ ^2 $→R.

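  For the linear transformation example, this means we assume A$ ^{-1} $ exists (i.e. A is invertible). In this case

  $ \begin{align}
  g^{-1}(z,w) &= b_{11}z+b_{12}w \\
  h^{-1}(z,w) &= b_{21}z+b_{22}w
  \end{align} $

  where

  $ A^{-1}=\begin{bmatrix}
  b_{11} & b_{12} \\
  b_{21} & b_{22}
  \end{bmatrix} $

- The partial derivatives

  $ \frac{\partial x}{\partial z},\;\frac{\partial x}{\partial w},\;\frac{\partial y}{\partial z},\;\frac{\partial y}{\partial w},\;\frac{\partial z}{\partial x},\;\frac{\partial z}{\partial y},\;\frac{\partial w}{\partial x},\;\frac{\partial w}{\partial y} $

  exist.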

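Then it can be shown that

$ f_{ZW}(z,w) = \frac{f_{XY}(g^{-1}(z,w),h^{-1}(z,w))}{\left|\frac{\partial\left(z,w\right)}{\partial\left(x,y\right)}\right|} $

where

$ \begin{align}
\left|\frac{\partial(z,w)}{\partial(x,y)}\right| &\equiv
\left|\begin{bmatrix}
\frac{\partial z}{\partial x} & \frac{\partial z}{\partial y} \\
\frac{\partial w}{\partial x} & \frac{\partial w}{\partial y} \end{bmatrix}\right| \\
&= \left|\frac{\partial z}{\partial x}\frac{\partial w}{\partial y}-\frac{\partial z}{\partial y}\frac{\partial w}{\partial x} \right|
\end{align} $

$ z=g(x,y)\qquad w=h(x,y) $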

Note that we take the absolute value of the determinant. The determinant itself is called the Jacobian of the transformation. For more on the Jacobian, see the Jacobian page.

The proof for the above formula is in Papoulis.
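For the linear transformation, the partial derivatives are the entries of A, so the Jacobian is det A and the formula reduces to f$ _{XY} $(A$ ^{-1} $(z,w)) / |det A|. The following sketch applies it to an assumed example that is not from the notes: X and Y independent exponentials with mean 1, Z = X + Y and W = X − Y. The function names and the midpoint-rule check are hypothetical choices for illustration. Integrating the resulting joint pdf over w should reproduce the pdf of the sum found earlier, z e$ ^{-z} $ for μ = 1.

```python
import math

def f_xy(x, y, mu=1.0):
    """Assumed joint pdf: independent exponentials with mean mu."""
    if x < 0 or y < 0:
        return 0.0
    return math.exp(-(x + y) / mu) / mu**2

def f_zw_linear(z, w, a, f):
    """Joint pdf of (Z, W) = A (X, Y): f(A^{-1}(z, w)) / |det A|."""
    (a11, a12), (a21, a22) = a
    det = a11 * a22 - a12 * a21  # Jacobian of a linear map
    if det == 0:
        raise ValueError("A must be invertible")
    # Entries b_ij of A^{-1}, as in the text
    b11, b12 = a22 / det, -a12 / det
    b21, b22 = -a21 / det, a11 / det
    x = b11 * z + b12 * w  # x = g^{-1}(z, w)
    y = b21 * z + b22 * w  # y = h^{-1}(z, w)
    return f(x, y) / abs(det)

A = ((1.0, 1.0), (1.0, -1.0))  # Z = X + Y, W = X - Y

def marginal_z(z, n=2000):
    """Integrate f_ZW(z, w) over w in [-z, z] with the midpoint rule."""
    if z <= 0:
        return 0.0
    dw = 2 * z / n
    total = 0.0
    for k in range(n):
        w = -z + (k + 0.5) * dw
        total += f_zw_linear(z, w, A, f_xy)
    return total * dw

print(f_zw_linear(2.0, 0.0, A, f_xy), marginal_z(2.0), 2.0 * math.exp(-2.0))
```

Here x = (z + w)/2 and y = (z − w)/2, so the joint pdf is nonzero only for |w| ≤ z, which is why the marginal integral runs over [−z, z].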

Example