
Revision as of 19:15, 10 November 2013

Random Variables and Signals

Topic 9: Expectation

Thus far, we have learned how to represent the probabilistic behavior of a random variable X using the density function $ f_X $ or the mass function $ p_X $.

Sometimes, we want to describe X probabilistically using only a small number of parameters. The expectation is often used to do this.

Definition $ \qquad $ The expected value of a continuous random variable X is defined as

$ E[X] = \int_{-\infty}^{\infty} x f_X(x)\,dx $

Definition $ \qquad $ The expected value of a discrete random variable X is defined as

$ E[X] = \sum_{x \in R_X} x\,p_X(x) $

where $ R_X $ is the range space of X.

Note:

- E[X] is also known as the mean of X. Other notations for E[X] include $ \mu_X $ and $ \overline{X} $.

- The equation defining E[X] for discrete X could have been derived from the definition for continuous X, using a density function $ f_X $ containing $ \delta $-functions.

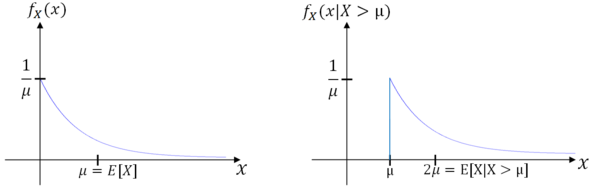

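Example $ \qquad $ X is an exponential random variable with parameter $ \lambda > 0 $, so that $ f_X(x) = \lambda e^{-\lambda x} $ for $ x \geq 0 $ and 0 otherwise. Find E[X].

$ E[X] = \int_{0}^{\infty} x \lambda e^{-\lambda x}\,dx = \frac{1}{\lambda} $

Let $ \mu = 1/\lambda $. We often write

$ f_X(x) = \frac{1}{\mu} e^{-x/\mu}, \quad x \geq 0 $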

Example $ \qquad $ X is a uniform discrete random variable with $ R_X $ = {1,...,n}. Then,

$ E[X] = \sum_{k=1}^{n} k \cdot \frac{1}{n} = \frac{1}{n} \cdot \frac{n(n+1)}{2} = \frac{n+1}{2} $
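The two definitions of E[X] (a sum of $ x\,p_X(x) $ over $ R_X $, or an integral of $ x f_X(x) $) can be checked numerically. The following is a minimal sketch, not part of the original notes: the helper names `discrete_mean` and `continuous_mean`, the values n = 6 and $ \lambda $ = 2, and the truncation of the integral at a finite upper limit are all illustrative assumptions.

```python
import math

def discrete_mean(pmf):
    """E[X] as the sum of x * p_X(x); pmf maps each value in R_X to its probability."""
    return sum(x * p for x, p in pmf.items())

def continuous_mean(pdf, lo, hi, steps=200_000):
    """Approximate E[X], the integral of x * f_X(x), with the midpoint rule on [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx
        total += x * pdf(x) * dx
    return total

# Discrete uniform on {1, ..., n}: the closed form is (n + 1) / 2.
n = 6
uniform_pmf = {k: 1.0 / n for k in range(1, n + 1)}
mean_uniform = discrete_mean(uniform_pmf)

# Exponential with rate lam: the closed form is 1 / lam.
# The upper limit 40 / lam is an assumed cutoff; the tail beyond it is negligible.
lam = 2.0
mean_exp = continuous_mean(lambda x: lam * math.exp(-lam * x), 0.0, 40.0 / lam)

print(mean_uniform, (n + 1) / 2)
print(mean_exp, 1 / lam)
```

Each printed pair should agree closely; the continuous case is only an approximation whose accuracy depends on the step count and the cutoff.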

Having defined E[X], we will now consider more general E[g(X)] for a function g:R → R.

Let Y = g(X). What is E[Y]? From previous definitions:

$ E[Y] = \int_{-\infty}^{\infty} y f_Y(y)\,dy $

or

$ E[Y] = \sum_{y \in R_Y} y\,p_Y(y) $

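We can find this by first finding $ f_Y $ or $ p_Y $ in terms of g and $ f_X $ or $ p_X $. Alternatively, it can be shown that

$ E[Y] = E[g(X)] = \int_{-\infty}^{\infty} g(x) f_X(x)\,dx $

or

$ E[Y] = E[g(X)] = \sum_{x \in R_X} g(x)\,p_X(x) $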

See Papoulis for the proof of the above.
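For a discrete X, the claim that E[g(X)] can be computed as the sum of $ g(x)\,p_X(x) $ over $ R_X $, without first finding $ p_Y $, can be illustrated on a small case. This sketch is an illustration only: the choice of X uniform on {-2,...,2} and g(x) = x² is an assumption made here, picked because g is not one-to-one.

```python
from collections import defaultdict
from fractions import Fraction

# Assumed example: X uniform on {-2, -1, 0, 1, 2} and g(x) = x^2.
p_X = {x: Fraction(1, 5) for x in range(-2, 3)}

def g(x):
    return x * x

# Route 1: build the mass function of Y = g(X), then sum y * p_Y(y).
p_Y = defaultdict(Fraction)          # missing keys start at Fraction(0)
for x, p in p_X.items():
    p_Y[g(x)] += p                   # values of x with the same g(x) pool their mass
mean_via_pY = sum(y * p for y, p in p_Y.items())

# Route 2: sum g(x) * p_X(x) directly over the range of X.
mean_via_pX = sum(g(x) * p for x, p in p_X.items())

print(dict(p_Y))
print(mean_via_pY, mean_via_pX)
```

Both routes give the same value; the second avoids deriving $ p_Y $ at all, which is the practical appeal of the formula.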

Two important cases of functions g:

- g(x) = x. Then E[g(X)] = E[X]

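- $ g(x) = (x - \mu_X)^2 $. Then $ E[g(X)] = E[(X - \mu_X)^2] $, where

$ E[(X - \mu_X)^2] = \int_{-\infty}^{\infty} (x - \mu_X)^2 f_X(x)\,dx $

or

$ E[(X - \mu_X)^2] = \sum_{x \in R_X} (x - \mu_X)^2\,p_X(x) $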

Note: $ \qquad $ $ E[(X - \mu_X)^2] $ is called the variance of X and is often denoted $ \sigma_X^2 $. Its positive square root $ \sigma_X $ is called the standard deviation of X.

Important property of E[]:

Let $ g_1 $: R → R, $ g_2 $: R → R, and $ \alpha,\beta $ ∈ R. Then

$ E[\alpha g_1(X) + \beta g_2(X)] = \alpha E[g_1(X)] + \beta E[g_2(X)] $

So E[] is a linear operator. The proof follows from the linearity of integration.
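Linearity, that is $ E[\alpha g_1(X) + \beta g_2(X)] = \alpha E[g_1(X)] + \beta E[g_2(X)] $, can be verified exactly for a discrete X using rational arithmetic. In this sketch the fair-die mass function, the constants, and the functions g1 and g2 are assumed for illustration.

```python
from fractions import Fraction

# Assumed example: X is a fair six-sided die.
p_X = {k: Fraction(1, 6) for k in range(1, 7)}

def expect(g, pmf):
    """E[g(X)] as the sum of g(x) * p_X(x) over the range of X."""
    return sum(g(x) * p for x, p in pmf.items())

alpha, beta = Fraction(3), Fraction(-2)

def g1(x):
    return x

def g2(x):
    return x * x

# Left side: expectation of the combined function.
lhs = expect(lambda x: alpha * g1(x) + beta * g2(x), p_X)
# Right side: the same combination of the individual expectations.
rhs = alpha * expect(g1, p_X) + beta * expect(g2, p_X)

print(lhs, rhs)
```

Because `Fraction` arithmetic is exact, the two sides match with no rounding error.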

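Important property of Var():

$ Var(X) = E[X^2] - \mu_X^2 $

Proof:

$ Var(X) = E[(X - \mu_X)^2] = E[X^2 - 2\mu_X X + \mu_X^2] = E[X^2] - 2\mu_X E[X] + \mu_X^2 = E[X^2] - \mu_X^2 $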
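The identity $ Var(X) = E[X^2] - (E[X])^2 $ can be compared against the defining form $ E[(X - \mu_X)^2] $ on a small mass function. The three-point mass function below is a made-up example chosen only so the arithmetic is easy to follow by hand.

```python
from fractions import Fraction

# Assumed (hypothetical) mass function on three points.
p_X = {0: Fraction(1, 2), 1: Fraction(1, 3), 4: Fraction(1, 6)}

mu = sum(x * p for x, p in p_X.items())               # E[X]
second_moment = sum(x * x * p for x, p in p_X.items())  # E[X^2]

# Definition: expected squared deviation from the mean.
var_definition = sum((x - mu) ** 2 * p for x, p in p_X.items())
# Shortcut: mean-square minus squared mean.
var_shortcut = second_moment - mu ** 2

print(mu, second_moment, var_definition, var_shortcut)
```

Here E[X] = 1 and E[X²] = 3, so both forms give a variance of 2.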

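Example $ \qquad $ X is Gaussian N($ \mu,\sigma^2 $). Find E[X] and Var(X).

$ E[X] = \int_{-\infty}^{\infty} \frac{x}{\sqrt{2\pi}\sigma} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,dx $

Let r = x - $ \mu $. Then

$ E[X] = \int_{-\infty}^{\infty} \frac{r}{\sqrt{2\pi}\sigma} e^{-\frac{r^2}{2\sigma^2}}\,dr + \mu \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}\sigma} e^{-\frac{r^2}{2\sigma^2}}\,dr $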

First term: Integrating an odd function over (-∞,∞) ⇒ first term is 0.

Second term: Integrating a Gaussian pdf over (-∞,∞) gives one ⇒ second term is $ \mu $.

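So E[X] = $ \mu $.

For the variance, we first find

$ E[X^2] = \int_{-\infty}^{\infty} \frac{x^2}{\sqrt{2\pi}\sigma} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,dx $

Using integration by parts, we see that this integral evaluates to $ \sigma^2+\mu^2 $. So,

$ Var(X) = E[X^2] - (E[X])^2 = \sigma^2 + \mu^2 - \mu^2 = \sigma^2 $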
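The Gaussian results E[X] = $ \mu $ and E[X²] = $ \sigma^2+\mu^2 $ can be sanity-checked by numerical integration. This is a rough sketch under stated assumptions: the parameter values, the helper `integrate`, and the decision to truncate the integrals at 12 standard deviations from the mean are all choices made here, not part of the notes.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma)

def integrate(f, lo, hi, steps=100_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    dx = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * dx) for i in range(steps)) * dx

mu, sigma = 1.5, 2.0                         # assumed example parameters
lo, hi = mu - 12 * sigma, mu + 12 * sigma    # assumed cutoff; tails beyond are negligible

mean = integrate(lambda x: x * gaussian_pdf(x, mu, sigma), lo, hi)
second_moment = integrate(lambda x: x * x * gaussian_pdf(x, mu, sigma), lo, hi)
variance = second_moment - mean ** 2

print(mean, mu)
print(second_moment, sigma ** 2 + mu ** 2)
print(variance, sigma ** 2)
```

Each printed pair should agree to several decimal places, which is consistent with the closed-form derivation but does not replace it.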

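Example $ \qquad $ X is Poisson with parameter $ \lambda $. Find E[X] and Var(X).

$ E[X] = \sum_{k=0}^{\infty} k \frac{e^{-\lambda}\lambda^k}{k!} = \lambda e^{-\lambda} \sum_{k=1}^{\infty} \frac{\lambda^{k-1}}{(k-1)!} = \lambda e^{-\lambda} e^{\lambda} = \lambda $

Similarly,

$ E[X(X-1)] = \sum_{k=2}^{\infty} k(k-1) \frac{e^{-\lambda}\lambda^k}{k!} = \lambda^2 e^{-\lambda} \sum_{k=2}^{\infty} \frac{\lambda^{k-2}}{(k-2)!} = \lambda^2 $

So, since $ E[X^2] = E[X(X-1)] + E[X] $ by linearity,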

$ E[X^2] = \lambda^2 +\lambda \ $

$ \Rightarrow Var(X) = E[X^2] - (E[X])^2 = \lambda^2 + \lambda - \lambda^2 = \lambda $
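The Poisson results E[X] = $ \lambda $, E[X²] = $ \lambda^2 + \lambda $ and Var(X) = $ \lambda $ can be checked by summing the series directly. In this sketch the value $ \lambda $ = 3 and the truncation of the infinite sums at 100 terms are assumptions; the omitted tail is far below floating-point precision for this $ \lambda $.

```python
import math

def poisson_pmf(k, lam):
    """P(X = k) for a Poisson random variable with parameter lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

lam = 3.0      # assumed example parameter
terms = 100    # assumed truncation point for the infinite sums

mean = sum(k * poisson_pmf(k, lam) for k in range(terms))
second_moment = sum(k * k * poisson_pmf(k, lam) for k in range(terms))
variance = second_moment - mean ** 2

print(mean, lam)
print(second_moment, lam ** 2 + lam)
print(variance, lam)
```

For larger $ \lambda $ the truncation point would need to grow, since more of the probability mass sits at large k.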

Moments

Moments generalize mean and variance to nth order expectations.

Definition $ \qquad $ The nth order moment of random variable X is

$ \mu_n = E[X^n] $

and the nth central moment of X is

$ v_n = E[(X - \mu_X)^n] $

So

- $ \mu_1 = E[X] $: mean

- $ \mu_2 = E[X^2] $: mean-square

- $ v_2 = Var(X) $: variance
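Taking the nth moment to be $ E[X^n] $ and the nth central moment to be $ E[(X - \mu_X)^n] $, both are straightforward to compute for a discrete X. The sketch below uses a fair die as an assumed example; the function names `moment` and `central_moment` are hypothetical helpers introduced here.

```python
from fractions import Fraction

def moment(pmf, n):
    """nth order moment E[X^n] of a discrete random variable."""
    return sum(x ** n * p for x, p in pmf.items())

def central_moment(pmf, n):
    """nth central moment E[(X - mu_X)^n], where mu_X is the first moment."""
    mu = moment(pmf, 1)
    return sum((x - mu) ** n * p for x, p in pmf.items())

# Assumed example: X is a fair six-sided die.
p_X = {k: Fraction(1, 6) for k in range(1, 7)}

mu_1 = moment(p_X, 1)           # mean
mu_2 = moment(p_X, 2)           # mean-square
v_1 = central_moment(p_X, 1)    # always zero
v_2 = central_moment(p_X, 2)    # variance
v_3 = central_moment(p_X, 3)    # zero here because the mass function is symmetric about the mean

print(mu_1, mu_2, v_1, v_2, v_3)
```

The first central moment is zero for any X with a finite mean, while the vanishing third central moment is specific to symmetric distributions such as this one.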

Conditional Expectation

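For an event M ∈ F with P(M) > 0, the conditional expectation of X given M is

$ E[X|M] = \int_{-\infty}^{\infty} x f_X(x|M)\,dx $

or

$ E[X|M] = \sum_{x \in R_X} x\,p_X(x|M) $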

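Example $ \qquad $ X is an exponential random variable with mean $ \mu $. Let M = {X > $ \mu $}. Find E[X|M]. Note that P(M) = P(X > $ \mu $) and since $ \mu $ > 0,

$ P(M) = \int_{\mu}^{\infty} \frac{1}{\mu} e^{-x/\mu}\,dx = e^{-1} $

It can be shown that

$ f_X(x|M) = \frac{f_X(x)}{P(M)} = \frac{1}{\mu} e^{-\frac{x-\mu}{\mu}}, \quad x > \mu $

and $ f_X(x|M) = 0 $ otherwise. Then,

$ E[X|M] = \int_{\mu}^{\infty} \frac{x}{\mu} e^{-\frac{x-\mu}{\mu}}\,dx = 2\mu $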
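For an exponential X with mean $ \mu $ and M = {X > $ \mu $}, the conditional expectation can be approximated numerically as the integral of $ x f_X(x) $ over M divided by P(M); the exact values are P(M) = $ e^{-1} $ and E[X|M] = $ 2\mu $. This sketch assumes $ \mu $ = 2 and truncates the integrals at $ 50\mu $; the helper `integrate` is a hypothetical midpoint-rule routine.

```python
import math

def integrate(f, lo, hi, steps=200_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    dx = (hi - lo) / steps
    return sum(f(lo + (i + 0.5) * dx) for i in range(steps)) * dx

mu = 2.0            # assumed example mean
upper = 50 * mu     # assumed cutoff; the tail beyond it is negligible

def pdf(x):
    """Exponential density with mean mu, valid for x >= 0."""
    return math.exp(-x / mu) / mu

# P(M) = P(X > mu)
p_M = integrate(pdf, mu, upper)

# E[X | M]: integrate x * f_X(x) over M, then normalize by P(M).
cond_mean = integrate(lambda x: x * pdf(x), mu, upper) / p_M

print(p_M, math.exp(-1))
print(cond_mean, 2 * mu)
```

The result matches the memoryless property of the exponential distribution: given X > $ \mu $, the remaining excess is again exponential with mean $ \mu $, so the conditional mean is $ \mu + \mu $.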

References

- M. Comer. ECE 600. Class Lecture. Random Variables and Signals. Faculty of Electrical Engineering, Purdue University. Fall 2013.
