
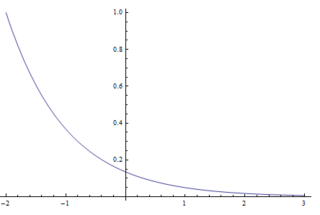

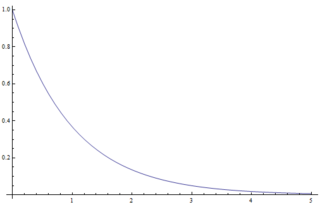

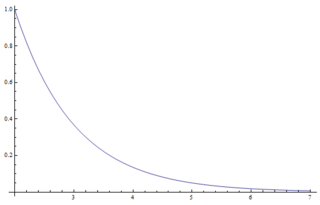


Revision as of 21:19, 10 February 2013

Convolution

Convolution is often presented in a way that emphasizes efficient calculation over understanding what the convolution actually is. To compute it pointwise, we're told to "flip one of the input signals, then perform shift+multiply+add operations until the signals no longer overlap." This is numerically valid, but you can in fact compute the convolution without flipping either signal. We'll do the latter here for illustration. Consider the convolution of the following constant input and causal impulse response:

$ y[n] \,=\, x[n] \ast h[n] $

$ x[n] \,=\, 1 \;\;\;\;\; \forall \, n $

$ h[n] = \left\{ \begin{array}{lr} \mathrm{e}^{-n} & : n \geq 0\\ 0 & : n < 0 \end{array} \right. $
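The two procedures can be compared numerically. Below is a minimal Python sketch, assuming we truncate the infinite input to impulses at k = -K, ..., K-1 and keep only the first L samples of h; `K`, `L` and the function names are hypothetical choices for illustration, not part of the original notes. `conv_superposition` never flips anything: it adds a copy of h starting at each impulse location. `conv_flip_and_slide` evaluates the usual sum $ \sum_k x[k]\,h[n-k] $.

```python
import math
from collections import defaultdict

# Hypothetical truncation so the infinite signals fit in a finite computation.
K = 50  # input impulses x[k] = 1 for k = -K, ..., K-1
L = 60  # keep h[m] = e^{-m} for m = 0, ..., L-1

def x(k):
    """Constant input 1, truncated to the window -K <= k < K."""
    return 1.0 if -K <= k < K else 0.0

def h(m):
    """Causal impulse response e^{-m} for 0 <= m < L, zero otherwise."""
    return math.exp(-m) if 0 <= m < L else 0.0

def conv_superposition():
    """No flipping: add a copy of h, scaled by x[k], starting at each impulse k."""
    y = defaultdict(float)
    for k in range(-K, K):
        for m in range(L):
            y[k + m] += x(k) * h(m)
    return y

def conv_flip_and_slide(n):
    """Textbook form y[n] = sum_k x[k] h[n - k]: h is flipped and shifted by n."""
    return sum(x(k) * h(n - k) for k in range(-K, K))

y_sup = conv_superposition()
for n in range(-5, 6):
    print(n, round(y_sup[n], 6), round(conv_flip_and_slide(n), 6))
```

For n well inside the truncation window, both columns agree and stay essentially constant in n, which is what the picture of superposed, time-shifted impulse responses leads us to expect.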

Our input function x is a constant 1. You can think of this as a (Kronecker) delta function occurring at every discrete time step, which causes the impulse response to 'go off' at every time step (see the graphs for a better visualization). What we term the 'convolution' is just the sum of all these time-shifted impulse responses that have non-zero outputs at the time for which we want the convolution (i.e. time n for y[n]).

To be more specific, consider an impulse generated at time n=0. The output from that single impulse response should be $ (1)*(e^{0}) = 1 $ at n=0, $ (1)*(e^{-1}) = 1/e $ at n=1, $ (1)*(e^{-2}) = 1/e^{2} $ at n=2, and so on. Another impulse is generated at n=1, yielding $ (1)*(0) = 0 $ at n=0, $ (1)*(e^{0}) = 1 $ at n=1, $ (1)*(e^{-1}) = 1/e $ at n=2, and so on. Look at what happens at n=1: these two impulse responses together contribute $ 1 + 1/e $. Carrying this back to the impulses generated at n=-1, -2, ..., which contribute $ e^{-2}, e^{-3}, \ldots $ at n=1, we get the geometric series:

$ \sum_{k=0}^{\infty} \mathrm{e}^{-k} \,=\, 1 + \frac{1}{e} + \frac{1}{e^2} + \frac{1}{e^3} + \cdots \,=\, \frac{1}{1-\frac{1}{e}} $
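To see how quickly this series converges, here is a small Python sketch (the function name `partial_sum` is hypothetical) that adds up the contributions of the most recent impulses at a fixed time n, where the impulse fired k steps earlier contributes $ h[k] = e^{-k} $. With two impulses it reproduces the $ 1 + 1/e $ value found at n=1, and the gap to $ 1/(1-1/e) $ shrinks by a factor of e with every additional impulse.

```python
import math

# Closed form of the geometric series: 1 / (1 - 1/e) = e / (e - 1).
closed_form = 1.0 / (1.0 - 1.0 / math.e)

def partial_sum(num_impulses):
    """Output at a fixed time n from the impulses fired 0, 1, ..., num_impulses - 1
    steps earlier; the impulse fired k steps ago contributes h[k] = e^{-k}."""
    return sum(math.exp(-k) for k in range(num_impulses))

for count in (1, 2, 5, 10, 20, 40):
    s = partial_sum(count)
    print(f"{count:3d} impulses: {s:.10f}  (gap to closed form {closed_form - s:.2e})")
```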

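Without even using the convolution formula given to us in class, we can intuitively grasp what the convolution should be at any time n (the choice of n does not matter, since the input has always been and will always be the same). It should be the sum of all values of the impulse response, i.e. the geometric series above. The continuous-time analog is the total area under the impulse response $ h(t) = \mathrm{e}^{-t} $ for $ t \geq 0 $ (and $ h(t) = 0 $ for $ t < 0 $):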

$ \int_{-\infty}^{\infty} h(\tau)\,\mathrm{d}\tau \,=\, \int_{0}^{\infty} h(\tau)\,\mathrm{d}\tau \;\;\;\;\; \because h(t)=0 \;\;\; \forall \, t<0 $

$ \Rightarrow \int_{0}^{\infty} \mathrm{e}^{-\tau}\,\mathrm{d}\tau \,=\, \left.-\mathrm{e}^{-\tau}\right|_{0}^{\infty} \,=\, -(\mathrm{e}^{-\infty} - \mathrm{e}^{0}) \,=\, -(0 - 1) \,=\, 1 $

$ \int_{-\infty}^{\infty} h(t-\tau)\,\mathrm{d}\tau \,=\, \int_{-\infty}^{t} h(t-\tau)\,\mathrm{d}\tau \;\;\;\;\; \because h(t)=0 \;\;\; \forall \, t<0 $

$ \Rightarrow \int_{-\infty}^{t} \mathrm{e}^{-(t-\tau)}\,\mathrm{d}\tau \,=\, \int_{-\infty}^{t} \mathrm{e}^{\tau-t}\,\mathrm{d}\tau \,=\, \left.\mathrm{e}^{\tau-t}\right|_{-\infty}^{t} \,=\, \mathrm{e}^{0} - \lim_{\tau \to -\infty} \mathrm{e}^{\tau-t} \,=\, 1 - 0 \,=\, 1 \;\;\;\;\; \forall \, t $
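Both continuous-time integrals can be sanity-checked numerically. This is a minimal Python sketch, assuming the infinite limits can be replaced by a finite window of length T = 40 (where $ e^{-40} $ is negligible) and using a hand-written composite trapezoidal rule; `trapezoid`, `T` and `steps` are hypothetical choices, not from the notes.

```python
import math

def h(t):
    """Continuous-time impulse response: e^{-t} for t >= 0, zero for t < 0."""
    return math.exp(-t) if t >= 0 else 0.0

def trapezoid(f, a, b, steps=100_000):
    """Composite trapezoidal rule for the integral of f over [a, b]."""
    dt = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for i in range(1, steps):
        total += f(a + i * dt)
    return total * dt

# Assumption: e^{-40} is negligible, so [0, T] stands in for [0, inf).
T = 40.0

# Area under h: integral of h(tau) over [0, inf).
area = trapezoid(h, 0.0, T)
print(f"area under h: {area:.6f}")

for t in (0.5, 1.0, 3.0):
    # y(t) = integral of h(t - tau) d tau; the integrand vanishes for tau > t,
    # so [t - T, t] stands in for (-inf, t].
    y_t = trapezoid(lambda tau: h(t - tau), t - T, t)
    print(f"y({t}) = {y_t:.6f}")
```

Both the area under h and each sampled y(t) should come out very close to 1, matching the two derivations; the small remaining difference comes from the step size and the truncated window.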