
Latest revision as of 18:23, 17 April 2008

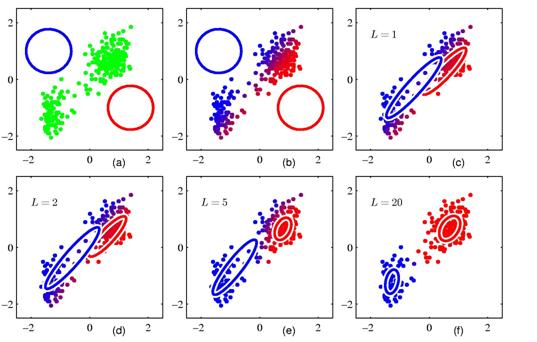

Here is an illustration of the Expectation-Maximization (EM) algorithm for Gaussian mixtures, from C. M. Bishop's book.

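As the figures show, the algorithm starts with randomly initialized parameters. At each iteration, it uses the current parameters to assign each data point softly to the mixture components, computing the probability that the point belongs to each component (the E step). From these weighted assignments, it then re-estimates the means, covariances, and mixing coefficients (the M step). The process repeats until convergence, for example until the log-likelihood of the data stops changing.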
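The loop described above can be sketched in plain Python. This is a minimal sketch, not Bishop's code: it assumes one-dimensional data (the figure uses two-dimensional data with full covariances), initializes the means by sampling data points, and stops when the log-likelihood changes by less than a tolerance. The names `em_gmm_1d` and `gaussian_pdf`, and the parameters `max_iter`, `tol`, and `seed`, are hypothetical choices made for illustration.

```python
import math
import random


def gaussian_pdf(x, mean, var):
    """Density of the 1-D normal distribution N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gmm_1d(data, k, max_iter=500, tol=1e-8, seed=0):
    """Fit a 1-D Gaussian mixture with k components by EM (illustrative sketch).

    Returns (weights, means, variances, log_likelihood), where log_likelihood
    is the value computed in the final E step.
    """
    rng = random.Random(seed)
    n = len(data)
    # Random initialization: means sampled from the data,
    # every variance set to the overall data variance, equal mixing weights.
    means = rng.sample(data, k)
    mu_all = sum(data) / n
    var_all = sum((x - mu_all) ** 2 for x in data) / n
    variances = [var_all] * k
    weights = [1.0 / k] * k
    prev_ll = -math.inf
    ll = prev_ll

    for _ in range(max_iter):
        # E step: responsibilities resp[i][j] = P(component j | x_i)
        # under the current parameters; also accumulate the log-likelihood.
        resp = []
        ll = 0.0
        for x in data:
            p = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(p)
            ll += math.log(total)
            resp.append([pj / total for pj in p])

        # M step: re-estimate each component from the responsibility-weighted data.
        for j in range(k):
            nj = sum(r[j] for r in resp)  # effective number of points in component j
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var_j, 1e-6)  # floor to avoid a collapsing component
            weights[j] = nj / n

        # Convergence check: stop once the log-likelihood barely changes.
        if abs(ll - prev_ll) < tol:
            break
        prev_ll = ll

    return weights, means, variances, ll
```

For example, on synthetic data drawn from two well-separated normal distributions, `em_gmm_1d(data, 2)` should recover means near the true centers; as with any EM run, a different `seed` can lead to a different local optimum.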