Revision as of 14:05, 8 May 2013


Jacobians and their applications

by Joseph Ruan, proud Member of the Math Squad.

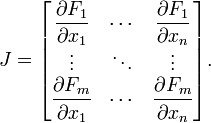

Basic Definition

The Jacobian matrix of a transformation is the matrix of first-order partial derivatives of each element of its output. In general, the Jacobian matrix of a transformation F looks like this:

$ J=\begin{bmatrix} \frac{\partial F_1}{\partial x_1} & \frac{\partial F_1}{\partial x_2} & \cdots \\ \frac{\partial F_2}{\partial x_1} & \frac{\partial F_2}{\partial x_2} & \cdots \\ \vdots & \vdots & \ddots \end{bmatrix} $

where $ F_1, F_2, F_3, \ldots $ are the elements of the output vector and $ x_1, x_2, x_3, \ldots $ are the elements of the input vector.

For example, in the two-dimensional case, let T be a transformation such that T(u,v)=<x,y>. The Jacobian matrix of this transformation then looks like this:

$ J(u,v)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix} $

Notice that this Jacobian matrix holds all of the partial derivatives of the transformation with respect to each of the variables. Each row therefore describes how one particular output element changes with respect to each of the input elements, so the matrix as a whole describes how a change in any input element affects the output elements.

To help illustrate making Jacobian matrices, let's do some examples:

Example #1:

What would be the Jacobian Matrix of this Transformation? $ T(u,v) = <u\times \cos v, u\times \sin v> $ .

Solution:

$ x=u \times \cos v \longrightarrow \frac{\partial x}{\partial u}= \cos v , \; \frac{\partial x}{\partial v} = -u\times \sin v $

$ y=u\times\sin v \longrightarrow \frac{\partial y}{\partial u}= \sin v , \; \frac{\partial y}{\partial v} = u\times \cos v $

$ J(u,v)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \end{bmatrix}= \begin{bmatrix} \cos v & -u\times \sin v \\ \sin v & u\times \cos v \end{bmatrix} $
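The result of Example #1 can also be checked numerically. The sketch below is only an illustration: the helper names `numerical_jacobian` and `polar`, the step size `h`, and the test point are assumptions made here, not part of the tutorial. It approximates each partial derivative with a central difference and compares the result with the matrix derived above.

```python
import math

def numerical_jacobian(f, point, h=1e-6):
    """Approximate the Jacobian of f at point using central differences.

    f maps a list of n floats to a list of m floats.
    The result has m rows (outputs) and n columns (inputs).
    """
    n = len(point)
    m = len(f(point))
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        forward = list(point)
        backward = list(point)
        forward[j] += h
        backward[j] -= h
        f_forward = f(forward)
        f_backward = f(backward)
        for i in range(m):
            jac[i][j] = (f_forward[i] - f_backward[i]) / (2 * h)
    return jac

def polar(p):
    """The transformation from Example #1: T(u, v) = <u cos v, u sin v>."""
    u, v = p
    return [u * math.cos(v), u * math.sin(v)]

# Arbitrary test point, chosen only for illustration.
u, v = 2.0, 0.5
approx = numerical_jacobian(polar, [u, v])
exact = [[math.cos(v), -u * math.sin(v)],
         [math.sin(v),  u * math.cos(v)]]

# Largest entry-wise difference between the two matrices.
max_error = max(abs(approx[i][j] - exact[i][j])
                for i in range(2) for j in range(2))
print(max_error)
```

The same helper works for non-square cases such as Examples #2 and #3, since it builds one row per output and one column per input.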

Example #2:

What would be the Jacobian Matrix of this Transformation?

$ T(u,v) = <u, v, u^v>,u>0 $ .

Solution:

Notice that this matrix will not be square, because the input and output have different dimensions: the transformation takes two inputs and returns three outputs.

$ x=u \longrightarrow \frac{\partial x}{\partial u}= 1 , \; \frac{\partial x}{\partial v} = 0 $

$ y=v \longrightarrow \frac{\partial y}{\partial u}=0 , \; \frac{\partial y}{\partial v} = 1 $

$ z=u^v \longrightarrow \frac{\partial z}{\partial u}= u^{v-1}\times v, \; \frac{\partial z}{\partial v} = u^v\times \ln(u) $

$ J(u,v)=\begin{bmatrix} \frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \\ \frac{\partial y}{\partial u} & \frac{\partial y}{\partial v} \\ \frac{\partial z}{\partial u} & \frac{\partial z}{\partial v} \end{bmatrix}= \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ u^{v-1}\times v & u^v\times \ln(u)\end{bmatrix} $
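The two entries in the last row come from different rules, which are easy to mix up. As a brief sketch of where they come from: the power $ u^v $ can be rewritten as an exponential, which is valid because u>0, and then differentiated with the chain rule with respect to each variable in turn:

$ u^v = e^{v\ln u} \longrightarrow \frac{\partial}{\partial v}u^v = e^{v\ln u}\times \ln u = u^v\times \ln u $

$ \frac{\partial}{\partial u}u^v = e^{v\ln u}\times \frac{v}{u} = u^v\times \frac{v}{u} = u^{v-1}\times v $

The second line agrees with the ordinary power rule, where v is treated as a constant exponent.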

Example #3:

What would be the Jacobian Matrix of this Transformation?

$ T(u,v) = <\tan (uv)> $ .

Solution:

Notice that this matrix will not be square either, because the input has two dimensions and the output only one. The transformation is also not injective, since its value depends only on the product uv.

$ x=\tan(uv) \longrightarrow \frac{\partial x}{\partial u}= \sec^2(uv)\times v, \; \frac{\partial x}{\partial v} = \sec^2(uv)\times u $

$ J(u,v)= \begin{bmatrix}\frac{\partial x}{\partial u} & \frac{\partial x}{\partial v} \end{bmatrix}=\begin{bmatrix}\sec^2(uv)\times v & \sec^2(uv)\times u \end{bmatrix} $

Application: Jacobian Determinants

The determinant of the Jacobian matrix from Example #1 is:

$ \left|\begin{matrix} \cos v & -u \times \sin v \\ \sin v & u \times \cos v \end{matrix}\right|=~~ u \cos^2 v + u \sin^2 v =~~ u $

Notice that when an integral is changed from Cartesian coordinates (dxdy) to polar coordinates $ (drd\theta) $, the area elements are related as follows:

$ dxdy=r\times drd\theta=u\times dudv $
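To see this relation at work, the following sketch adds up the area element u du dv over a disk and compares the total with the familiar area formula. It is only an illustration: the function names `jacobian_det_polar` and `disk_area`, the midpoint rule, and the number of steps are choices made here, not part of the tutorial.

```python
import math

def jacobian_det_polar(u, v):
    """Determinant of the Jacobian matrix from Example #1 at (u, v)."""
    a, b = math.cos(v), -u * math.sin(v)
    c, d = math.sin(v), u * math.cos(v)
    return a * d - b * c

def disk_area(radius, steps=200):
    """Approximate the area of a disk by summing |det J| du dv.

    Uses a midpoint rule with u in [0, radius] and v in [0, 2*pi].
    """
    du = radius / steps
    dv = 2 * math.pi / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * du
        for j in range(steps):
            v = (j + 0.5) * dv
            total += abs(jacobian_det_polar(u, v)) * du * dv
    return total

area = disk_area(1.0)
print(area, math.pi)  # the two values should agree closely
```

Without the factor from the determinant, the same sum would give the area of the rectangle in the (u,v) plane rather than the area of the disk.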

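Generalizing from this, the area element of one set of coordinates is related to that of another set by

$ dC_1=\det(J(T))\,dC_2 $

where $ C_1 $ is the first set of coordinates, $ C_2 $ is the second set of coordinates, T is the transformation that takes $ C_2 $ to $ C_1 $ (just as T(u,v)=<x,y> takes polar coordinates to Cartesian coordinates above), and det(J(T)) is the determinant of the Jacobian matrix of T. If the determinant is negative, its absolute value is used, since an area element cannot be negative.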

It is important to notice several aspects: first, the determinant is assumed to exist and be non-zero, and therefore the Jacobian matrix must be square and invertible. This makes sense because when changing coordinates, it should be possible to change back.

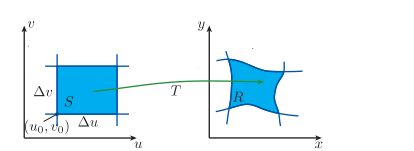

Moreover, recall from linear algebra that, in a two dimensional case, the determinant of a matrix of two vectors describes the area of the parallelogram drawn by it, or more accurately, it describes the scale factor between the unit square and the area of the parallelogram. If we extend the analogy, the determinant of the Jacobian would describe some sort of scale factor change from one set of coordinates to the other. Here is a picture that should help:

This is the general idea behind change of variables. It is easy to see that the Jacobian method matches up with one-dimensional change of variables:

$ T(u)=u^2=x, \quad J(u)=\begin{bmatrix}\frac{\partial x}{\partial u}\end{bmatrix}=\begin{bmatrix}2u\end{bmatrix}, \quad dx=\left|J(u)\right|\times du=2u\times du $
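As a quick check of the one-dimensional formula, here is a worked integral. The integrand $ \sqrt{x} $ and the limits are chosen here only for illustration. With $ x=u^2 $, the limits x=0 and x=4 become u=0 and u=2:

$ \int_0^4 \sqrt{x}\,dx = \int_0^2 \sqrt{u^2}\times 2u\,du = \int_0^2 2u^2\,du = \frac{16}{3} $

Integrating directly gives the same value: $ \int_0^4 \sqrt{x}\,dx = \frac{2}{3}\times 4^{3/2} = \frac{16}{3} $.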

Example #4:

Compute the following expression:

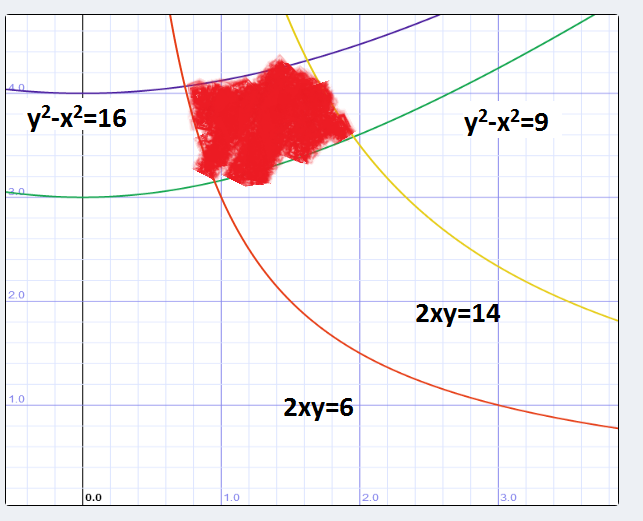

$ \int(x^2+y^2)dA $ where the region of integration is bounded by $ x^2-y^2=9 $, $ x^2-y^2=16 $, $ 2xy=14 $, $ 2xy=6 $.

Solution:

First, let's graph the region.
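One way the computation could then proceed is sketched below. It assumes the substitution $ u=x^2-y^2 $, $ v=2xy $, which is suggested by the boundary curves but is not stated above, and it assumes the region is the piece lying in the first quadrant. Here the Jacobian is taken of the map from (x,y) to (u,v):

$ J(x,y)=\begin{bmatrix} \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} \\ \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} \end{bmatrix}= \begin{bmatrix} 2x & -2y \\ 2y & 2x \end{bmatrix}, \quad \det(J(x,y))=4x^2+4y^2=4(x^2+y^2) $

$ dudv=4(x^2+y^2)\times dxdy \longrightarrow (x^2+y^2)\,dA=\frac{1}{4}\times dudv $

$ \int(x^2+y^2)dA=\int_{6}^{14}\int_{9}^{16}\frac{1}{4}\,du\,dv=\frac{1}{4}\times 7\times 8=14 $

Under these assumptions, the factor $ x^2+y^2 $ in the integrand cancels against the determinant, so the integral reduces to the area of a rectangle in the (u,v) plane.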


Rhea's Summer 2013 Math Squad was supported by an anonymous gift.