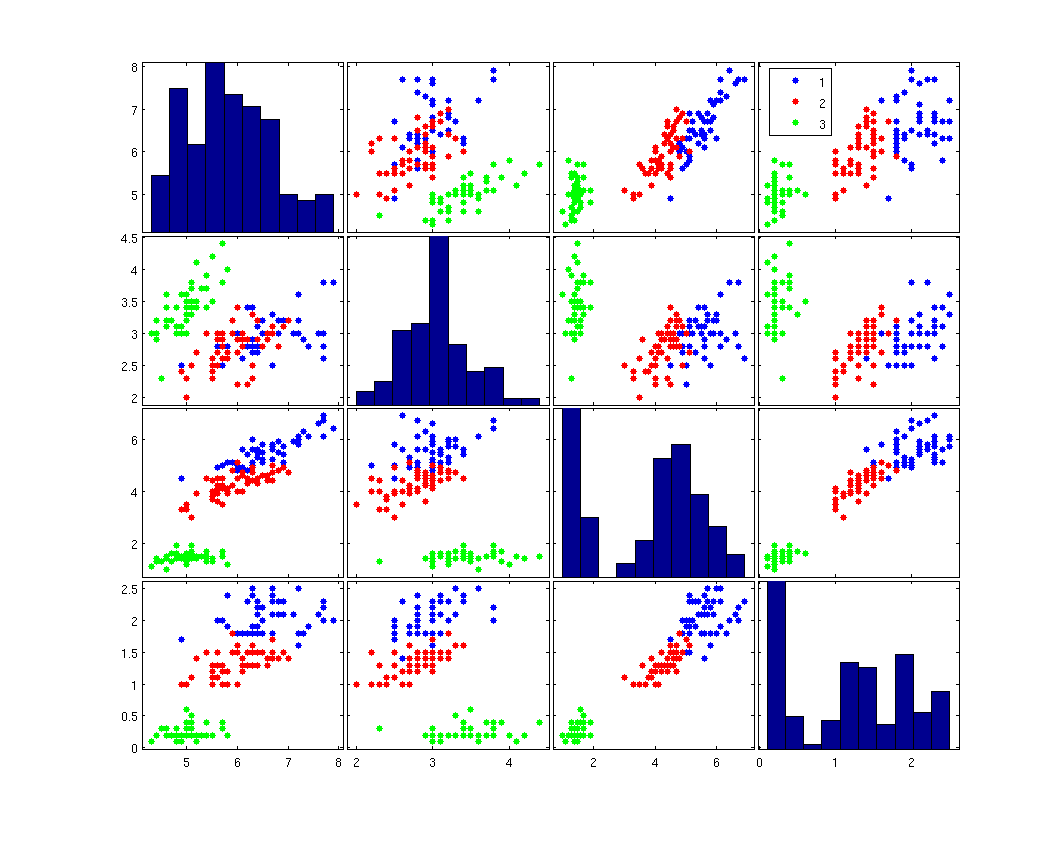

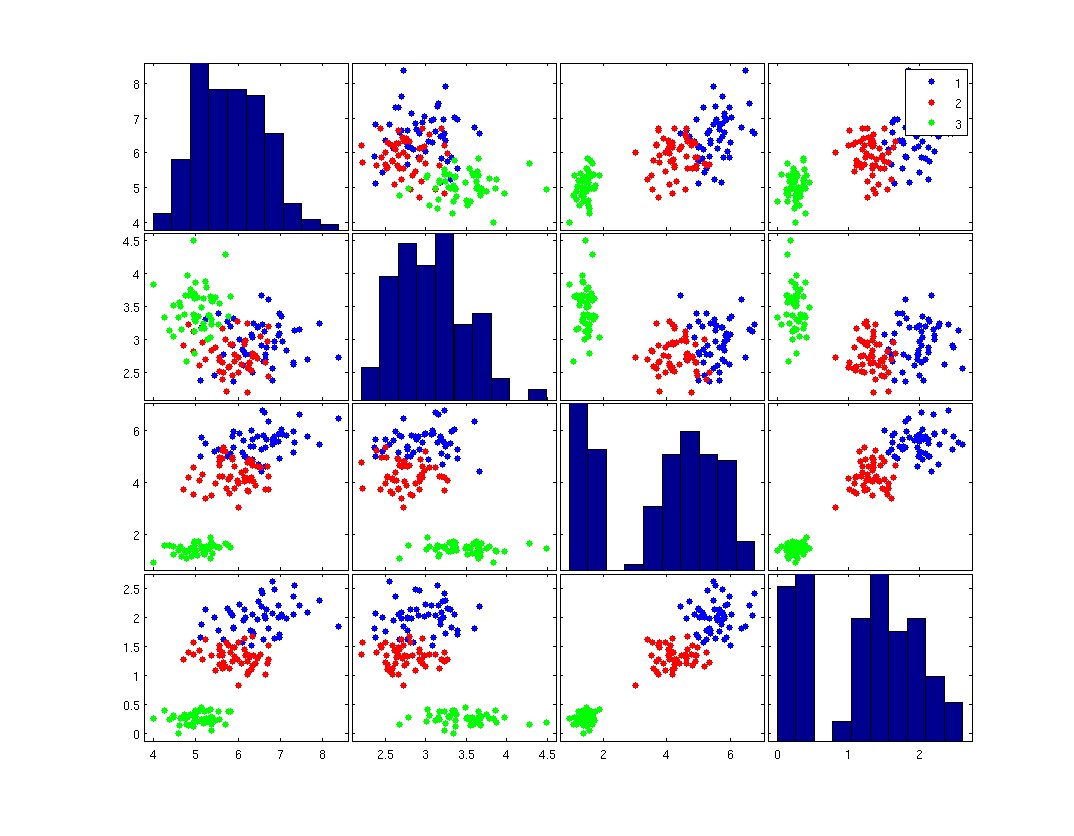


Revision as of 12:43, 7 April 2008

A Naive Bayes (or Naïve Bayes) classifier is a classifier designed around a simple yet powerful assumption: that within each class, the measured variables are independent. For example, consider the famous Iris data set, which contains four measurements (sepal length, sepal width, petal length, and petal width) taken from flowers of three species of the Iris family. A Naive Bayes classifier will assume that within each class, these measurements are independent of one another, as illustrated in the second figure.

Here is the original Iris data set, plotted one pair of variables at a time.

Compare this to a synthetic data set, which is designed to have the same mean and standard deviation for each class (when considering one dimension at a time) but in which the dimensions are independent within each class.
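One way such a synthetic data set could be produced is sketched below. This is not necessarily how the figure was generated: it assumes each dimension is Gaussian, and it uses a made-up, strongly correlated two-dimensional class as a stand-in for one Iris class. The function names `independent_synthetic` and `correlation` are hypothetical helpers introduced for this illustration.

```python
import math
import random
import statistics


def independent_synthetic(samples, n, rng):
    """Draw n points whose per-dimension mean and standard deviation match
    `samples`, with every dimension drawn independently (Gaussian assumed)."""
    dims = list(zip(*samples))  # one tuple of values per dimension
    means = [statistics.mean(d) for d in dims]
    stdevs = [statistics.stdev(d) for d in dims]
    return [tuple(rng.gauss(m, s) for m, s in zip(means, stdevs))
            for _ in range(n)]


def correlation(points):
    """Pearson correlation between the first two dimensions of `points`."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)

# Hypothetical stand-in for one class: two strongly correlated measurements.
original = []
for _ in range(2000):
    x = rng.gauss(5.0, 1.0)
    original.append((x, 0.8 * x + rng.gauss(0.0, 0.3)))

# Same marginal statistics, but the dimensions are sampled independently.
synthetic = independent_synthetic(original, 2000, rng)

print("original correlation: ", round(correlation(original), 2))
print("synthetic correlation:", round(correlation(synthetic), 2))
```

The per-dimension means and standard deviations of `synthetic` track those of `original`, while the correlation between the two dimensions is lost. That loss is exactly the information the Naive Bayes assumption discards.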

Comparing the two figures, we can see that sometimes the Naive Bayes assumption is good, and sometimes it is not. When the data are not correlated, as in the bottom left panel, Naive Bayes gives a very similar distribution. When the data are strongly correlated, as in the panel in the second row, fourth column, Naive Bayes will probably lead to a poor classifier. Curiously, there are times when the data are strongly correlated, but Naive Bayes will likely give the same classifier as an ideal discriminator. Consider the panel in the fourth (bottom) row and third column. Here, both Naive Bayes and an ideal classifier will probably produce a decision boundary that is a line perpendicular to the segment joining the class means.
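To make the discussion concrete, here is a minimal sketch of a Naive Bayes classifier with Gaussian class models. It assumes every dimension within a class is a one-dimensional Gaussian. The training data are made up for illustration, and the names `fit_gaussian_nb`, `log_posterior`, `predict`, and `draw` are hypothetical.

```python
import math
import random
import statistics


def fit_gaussian_nb(samples_by_class):
    """Estimate a prior plus per-dimension mean and variance for each class."""
    total = sum(len(samples) for samples in samples_by_class.values())
    model = {}
    for label, samples in samples_by_class.items():
        dims = list(zip(*samples))  # one tuple of values per dimension
        model[label] = {
            "log_prior": math.log(len(samples) / total),
            "means": [statistics.mean(d) for d in dims],
            "variances": [statistics.variance(d) for d in dims],
        }
    return model


def log_posterior(params, x):
    """Unnormalized log posterior: the log prior plus a sum of 1-D Gaussian
    log densities, one per dimension (this sum is the independence assumption)."""
    score = params["log_prior"]
    for value, mean, var in zip(x, params["means"], params["variances"]):
        score += -0.5 * math.log(2 * math.pi * var) - (value - mean) ** 2 / (2 * var)
    return score


def predict(model, x):
    """Return the class label with the highest unnormalized log posterior."""
    return max(model, key=lambda label: log_posterior(model[label], x))


rng = random.Random(1)


def draw(cx, cy, n):
    """Hypothetical class centered at (cx, cy) with correlated dimensions."""
    points = []
    for _ in range(n):
        u = rng.gauss(0.0, 1.0)
        points.append((cx + u, cy + 0.8 * u + rng.gauss(0.0, 0.3)))
    return points


training = {"a": draw(0.0, 0.0, 500), "b": draw(4.0, 4.0, 500)}
model = fit_gaussian_nb(training)
print(predict(model, (0.2, -0.1)), predict(model, (3.9, 4.3)))
```

Note that the model never stores a covariance between dimensions. Whatever correlation the training data contain is ignored, which is why the quality of the resulting classifier depends on how the correlation is oriented relative to the class means.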

Fisher's Linear Discriminant is ideal if both classes are Gaussian with the same covariance matrix and equal priors. Naive Bayes classification with Gaussian class models will give the same results as Fisher's Linear Discriminant when the dimensions are independent and the classes share the same per-dimension variances. It may give results that are very close even if the dimensions are not independent.
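The relationship can be checked numerically with a small sketch. It rests on several assumptions not stated in the text: two dimensions only, made-up data, and a Naive Bayes model whose per-dimension variances are pooled across the two classes (so that its boundary is linear). Under those assumptions, Fisher's direction is the inverse within-class scatter matrix applied to the difference of the class means, and the Naive Bayes direction is the same computation with the off-diagonal scatter ignored. All function names are hypothetical.

```python
import math
import random
import statistics


def class_stats(samples):
    """Return the 2-D mean and the scatter entries (sxx, sxy, syy) of one class."""
    xs = [p[0] for p in samples]
    ys = [p[1] for p in samples]
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in samples)
    return (mx, my), (sxx, sxy, syy)


def fisher_direction(class0, class1):
    """w = S_W^{-1} (m1 - m0), using the explicit inverse of a 2x2 matrix."""
    (m0, s0), (m1, s1) = class_stats(class0), class_stats(class1)
    sxx, sxy, syy = (a + b for a, b in zip(s0, s1))
    dx, dy = m1[0] - m0[0], m1[1] - m0[1]
    det = sxx * syy - sxy ** 2
    return ((syy * dx - sxy * dy) / det, (sxx * dy - sxy * dx) / det)


def pooled_nb_direction(class0, class1):
    """Normal of the linear boundary of Gaussian Naive Bayes with pooled
    variances: the mean difference divided by each dimension's scatter."""
    (m0, s0), (m1, s1) = class_stats(class0), class_stats(class1)
    return ((m1[0] - m0[0]) / (s0[0] + s1[0]),
            (m1[1] - m0[1]) / (s0[2] + s1[2]))


def cosine(u, v):
    """Cosine of the angle between two 2-D vectors."""
    return (u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v))


rng = random.Random(2)


def make_class(offset, correlated, n=2000):
    """Hypothetical class: independent or strongly correlated dimensions."""
    points = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        y = 0.9 * x + rng.gauss(0.0, 0.3) if correlated else rng.gauss(0.0, 2.0)
        points.append((offset[0] + x, offset[1] + y))
    return points


ind0, ind1 = make_class((0, 0), False), make_class((2, 1), False)
cor0, cor1 = make_class((0, 0), True), make_class((2, 0), True)

cos_independent = cosine(fisher_direction(ind0, ind1), pooled_nb_direction(ind0, ind1))
cos_correlated = cosine(fisher_direction(cor0, cor1), pooled_nb_direction(cor0, cor1))
print(round(cos_independent, 3), round(cos_correlated, 3))
```

A cosine near 1 means the two methods produce nearly parallel boundaries. In this sketch the independent case agrees closely, while this particular correlated case does not. Other correlated configurations can still agree, depending on how the mean difference is oriented relative to the correlation.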