Computer Engineering(CE)

Question 1: Algorithms

August 2013

Back to QE CE question 1, August 2013

Problem 3. The minimum bottle neck spanning tree (MBST) is a spanning tree that seeks to minimize the use of most expensive (the largest weight) edge in the tree. More specifically, for a tree $ T $ over a graph G, we define $ e $ to be a bottleneck edge of $ T $ if it's an edge with the largest weight. Note, multiple edges may have the same weight. The tree $ T $ is an MBST if it is a spanning tree and there is no other spanning tree of G with a cheaper bottleneck edge. Prove or disprove that an MBST for a graph G is always a minimum spanning tree for G.

Solution 1

A minimum bottle neck spanning tree (MBST) is not always a minimum spanning tree MST.

Proof: Suppose we have a spanning tree $ T $ which is a MBST of $ G $ , and $ T $ is also a MST. The bottle neck is edge $ e $ with weight $ w $. Then I have another vertex $ X $ that will be connected to the graph $ G $ to form a new graphs $ G' $. $ G' = G + X $. And the possible edges that connect graph $ G' $ and new vertex $ X $ has weights $ w_1, w_2, ... W_x $, and each is no greater than the bottle neck weight $ w $. Using any of the edges from $ w_1, w_2, ... W_x $, I get a new tree $ T' $. So $ T' $ is still a spanning tree, and its largest weight remains $ w $, so $ T' $ is also an MBST. However, among these edges that I can choose to span the tree, only the one that has the smallest weight will be a MST, by definition. So an MBST for a graph $ G' $ is not a minimum spanning tree for $ G' $. End of proof.

Solution 2

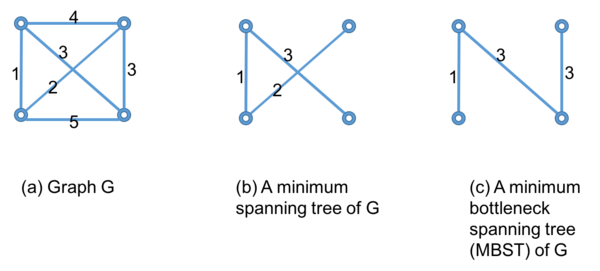

A MBST tree is not a minimum spanning tree for $ G $, ABST may not contain the lightest edge. A counter example is showing in figure below:

Comments on Solution 2:

This is a good counter example that showing MBST is not a MST.